Section 1. The UCSF Health Problem

While pain after spine surgery is expected to resolve within a few weeks, a substantial number of patients experience post-operative pain for over 6 months [1]. At UCSF Health, nearly 15% of patients need opioids three months after spine surgery, an indicator of chronic post-surgical pain (CPSP) that adds significant burden to both the surgical care team and the broader health system. When poorly managed, CPSP after spine surgery leads to new or escalated chronic opioid use [2], higher costs of care [3], and worse post-operative outcomes [4]. However, only a small fraction of these patients at UCSF have been evaluated by the pain service for either pre-surgical optimization or post-operative pain management.

To address this problem, the Pain Medicine Department launched a transitional pain service (TPS), a specialized program providing multidisciplinary perioperative care with a tailored combination of preoperative optimization, perioperative pain management planning, and ongoing support during the outpatient transition. Several other institutions have successfully implemented a TPS, resulting in significantly improved pain control and reduced opioid use in targeted patient cohorts [5-7]. However, given the limited capacity of this service, a better method to select high-risk patients is essential to maximize the efficacy of a TPS [8].

Current perioperative guidelines provide simple selection criteria, such as the O-NET+ classification, that rely on a few indicators like psychiatric conditions or previous opioid use to guide patient selection [9]. Given the interplay of the biopsychosocial factors that contribute to the development of CPSP, these criteria fall short in identifying vulnerable patients with modifiable risk factors. An intelligent selection mechanism is needed to help our intended end-users, UCSF neuro-spine and pain providers, easily evaluate and identify high-risk patients for referral to the UCSF TPS, which can improve patient outcomes and reduce health system costs.

Section 2. How might AI help?

Given the constrained clinical capacity of a TPS, it is essential to identify patients with the highest likelihood of developing CPSP. Excessive enrollment of patients at low risk of developing CPSP can dilute TPS efficacy, allocating care to patients unlikely to benefit and diverting resources from patients who truly develop CPSP. We believe that an artificial intelligence (AI) tool is the best approach to identify TPS candidates because AI can automatically analyze multiple factors simultaneously and provide clinicians with a more comprehensive risk profile. While numerous machine-learning models have been developed to predict post-surgical opioid use and pain trajectories [10], none have been rigorously evaluated for their ability to guide referrals to a TPS.

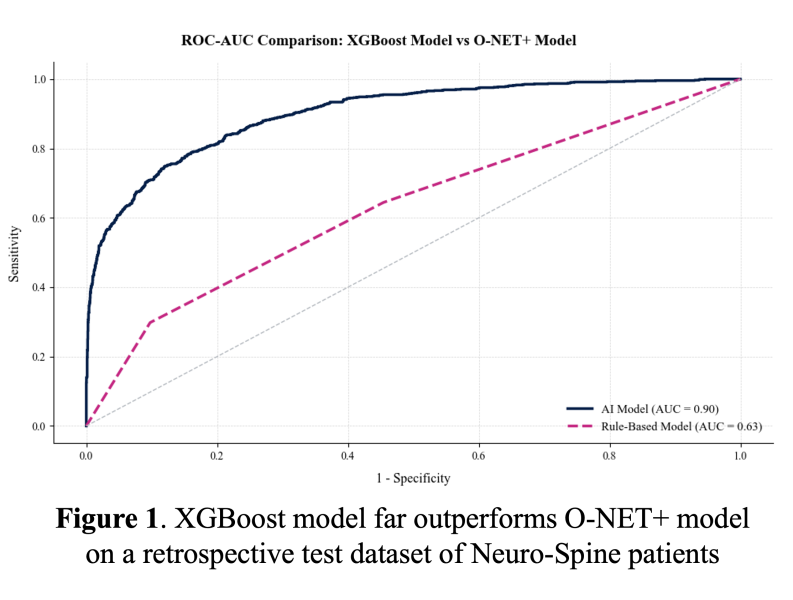

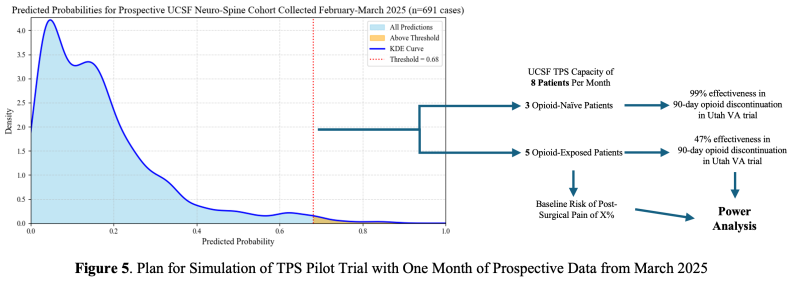

We previously retrospectively validated a gradient-boosted decision-tree model (eXtreme Gradient Boosting, XGBoost) on Neuro-Spine cases at UCSF from 2015 to 2023, with predictions made pre-operatively at the surgical booking date. The model analyzes data pulled from UCSF Clarity, incorporating features such as procedural details, prior medications, prior pain-related ICD diagnosis codes, and clinician prescribing patterns. The target population includes patients who were prescribed opioids between 30 and 180 days post-discharge and exhibited severe acute post-surgical pain trajectories, as determined by a simple linear regression classification of their in-patient pain score trajectory. This outcome definition was developed with pain physicians to capture the ideal TPS population, and we are now validating this rule-based criterion retrospectively against pain providers' clinical assessments of post-surgical pain syndrome. Using this outcome, the model outperformed the O-NET+ tool from perioperative guidelines [9] at identifying, at the surgical booking date, patients with challenging postoperative pain control and opioid usage (Figure 1).

For comparison, the positive predictive value was 0.97 with our XGBoost model and 0.43 with the O-NET+ tool at the 0.68 threshold selected based on prospective validation results (Figure 5), highlighting our model’s superior precision in identifying true high-risk patients compared to broader, less discriminating traditional criteria. Using the AI Pilot Award, we aim to conduct a silent prospective validation of this model to demonstrate that it identifies high-risk Neuro-Spine patients for TPS referral in the clinical setting better than traditional methods.
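The rule-based outcome described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the study's labeling code: the function names, the input layout, and both cutoffs used to call a trajectory "severe" are hypothetical, since the text specifies only a simple linear regression on in-patient pain scores combined with an opioid prescription 30 to 180 days after discharge.

```python
from statistics import mean


def pain_slope(hours, scores):
    """Ordinary least-squares slope of pain score against time (points per hour)."""
    if len(hours) != len(scores) or len(hours) < 2:
        raise ValueError("need at least two paired observations")
    h_bar, s_bar = mean(hours), mean(scores)
    sxx = sum((h - h_bar) ** 2 for h in hours)
    if sxx == 0:
        raise ValueError("all observations share the same time point")
    sxy = sum((h - h_bar) * (s - s_bar) for h, s in zip(hours, scores))
    return sxy / sxx


# Hypothetical cutoffs: the proposal does not state how "severe" is defined.
SEVERE_MEAN_SCORE = 7.0     # assumed: high average pain on a 0-10 scale
NON_RESOLVING_SLOPE = 0.0   # assumed: pain that is flat or rising over the stay


def is_severe_trajectory(hours, scores):
    """Assumed rule: high average pain that does not trend downward."""
    return (mean(scores) >= SEVERE_MEAN_SCORE
            and pain_slope(hours, scores) >= NON_RESOLVING_SLOPE)


def meets_outcome(hours, scores, opioid_rx_days_post_discharge):
    """True when both parts of the outcome definition hold.

    The 30-180 day window is treated as inclusive, which is an assumption.
    """
    late_opioid = any(30 <= d <= 180 for d in opioid_rx_days_post_discharge)
    return late_opioid and is_severe_trajectory(hours, scores)
```

For example, `meets_outcome([0, 12, 24], [8, 8, 9], [45])` would label a patient whose pain stays high across the first day and who receives an opioid prescription 45 days after discharge.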

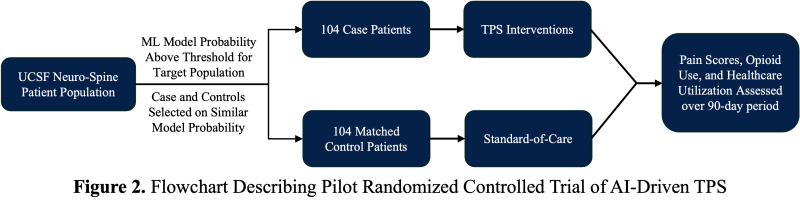

After confirming that prospective validation accuracy matches the retrospective results, we plan to initiate an intervention that uses model-generated probabilities to refer Neuro-Spine patients to the previously established UCSF TPS, collaborating with UCSF pain physicians to conduct a pilot randomized controlled trial (RCT) of an AI-driven TPS. The model is critical to this trial’s success because a prior RCT of the TPS [7] lacked power for long-term outcomes, largely due to inadequate patient identification using basic screening methods. In the trial, patients receiving the TPS intervention will be compared to standard-of-care controls matched on outcome probabilities predicted by the model. Assuming a conservative estimate that 50% of the patients identified by the model will develop post-surgical pain, a stratified power analysis with an alpha of 0.05, 80% power, and a 10% absolute event reduction indicates that 104 participants per arm are needed (Figure 2). Ultimately, we hope that this pilot RCT of an AI-enabled TPS for UCSF Neuro-Spine patients will generate evidence of the clinical safety, equity, and benefit of our approach.

Section 3. How would an end-user find and use it?

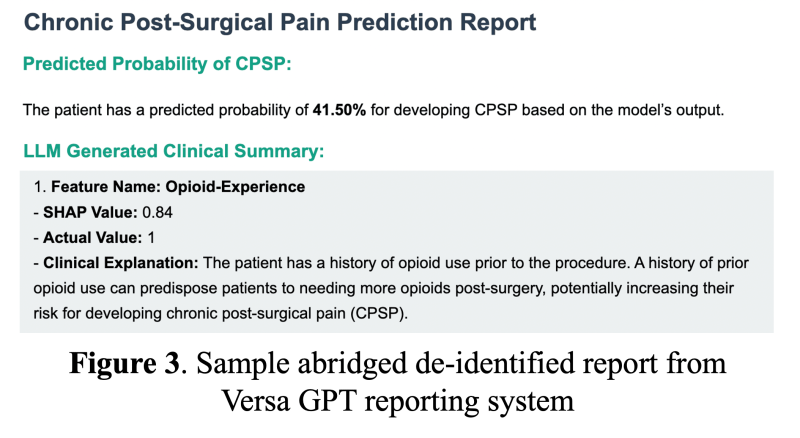

The model will be embedded into the clinical workflow by running each night on all surgical cases booked that day for future surgical dates, using real-time data from UCSF's Epic Clarity instance. When a patient exceeds the risk threshold, an automated notification will be sent to both the neuro-spine surgical team and the TPS team. The exact notification method (email, APeX in-basket message, secure chat, or phone call) will be determined in collaboration with the development team and through planned user testing. Regardless of the modality, the notification will deliver a TPS referral recommendation and support pre-surgical planning for patient optimization.

As an interpretability component that promotes clinician oversight of model results, the notification will include a report generated by UCSF Versa GPT, a PHI-secure large language model (Figure 3). The report explains the rationale behind the model’s prediction using LLM-generated descriptions of the most important features for that patient. The surgical and TPS teams will review this information and act on its recommendation by initiating a referral to the TPS and coordinating perioperative pain management strategies. This low-friction, automated approach ensures the AI support is easily discoverable, actionable, and aligned with existing clinical workflows.
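The nightly selection step described above can be sketched as follows. This is an illustration only: `BookedCase`, its fields, and both functions are hypothetical names, the risk score is assumed to have been computed already, and "exceeds the risk threshold" is treated as at-or-above 0.68. The delivery channel is deliberately left out because it has not been decided.

```python
from dataclasses import dataclass
from datetime import date

RISK_THRESHOLD = 0.68  # operating threshold quoted in the proposal


@dataclass(frozen=True)
class BookedCase:
    case_id: str          # hypothetical identifier
    booked_on: date       # date the surgery was scheduled
    surgery_date: date    # planned date of surgery
    risk_score: float     # model probability, assumed already computed


def cases_to_flag(cases, run_date, threshold=RISK_THRESHOLD):
    """Cases booked on run_date, with surgery still ahead, at or above threshold."""
    return [
        c for c in cases
        if c.booked_on == run_date
        and c.surgery_date > run_date
        and c.risk_score >= threshold   # inclusive comparison is an assumption
    ]


def notification_text(case):
    """Plain-text body; how it is delivered is out of scope for this sketch."""
    return (
        f"Case {case.case_id}: predicted CPSP risk {case.risk_score:.2f} "
        f"(threshold {RISK_THRESHOLD:.2f}). Consider TPS referral before "
        f"surgery on {case.surgery_date.isoformat()}."
    )
```

Keeping selection and message construction as separate functions means the notification modality can change after user testing without touching the flagging logic.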

Section 4. Embed a picture of what the AI tool might look like.

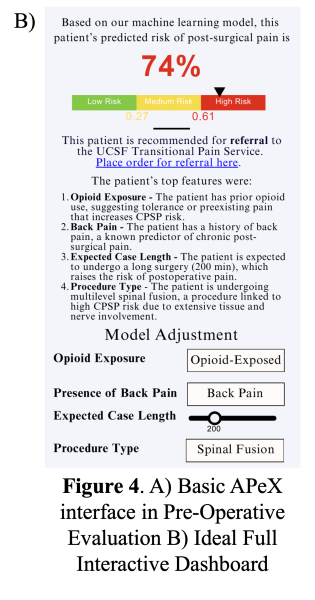

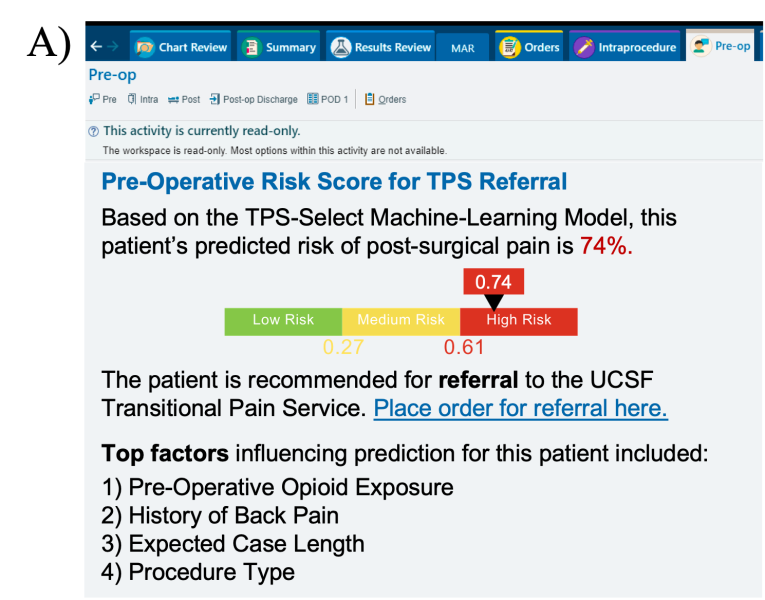

In APeX, we plan to have a simple interface that includes the predicted risk score along with the key features driving the prediction (Figure 4A). While this approach will support clinical decision-making around the patient’s risk for CPSP, we ideally envision an interactive, in-depth dashboard that would require more resources to implement (Figure 4B). This dashboard includes three core components: (1) a visual risk bar indicating the predicted CPSP risk level, (2) a direct-action link enabling clinicians to place an order for TPS referral when appropriate, and (3) a display of the top features influencing the prediction for each patient, explained to the clinician by the LLM-generated report, with the option to adjust key variables and re-run the model as needed.

Section 5. What are the risks of AI errors?

Several types of AI errors could impact the effectiveness of our model and an AI-driven TPS.

First, classification errors. Excessive false positives may dilute the TPS’s impact by allocating resources to lower-risk patients, and false negatives may inappropriately reassure care teams, leading to undertreatment of pain and missed patient concerns. We plan to monitor month-to-month variations in model performance, identifying features or patient clusters that may contribute to suboptimal performance using our previously published toolkit of statistical methods [11]. To avoid confounding from the presently active TPS, we will also exclude TPS-referred patients from prospective validation; their low volume makes them unlikely to bias results.

Second, inequities in model performance. Disparities in prediction accuracy across race, gender, or opioid history could limit equitable access to care. We will track performance metrics stratified by these groups and aim to keep PPV differences within 10%. If differences exceed this threshold, we will address them using the statistical toolkit described above.

Third, poor calibration. A model that poorly balances opioid-naïve and opioid-experienced patients may reduce TPS effectiveness. Using the distribution of prediction scores in the prospective validation cohort, we can simulate month-to-month sampling to extrapolate how fluctuations in performance and cohort make-up will affect our likelihood of showing a statistical benefit. Combined with the effectiveness reported in previous TPS trials, this simulation can inform a power analysis for a pilot TPS trial and its predicted efficacy (Figure 5).
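The stratified PPV check mentioned under inequities in model performance could look like the sketch below. It is illustrative only: the record layout and function names are hypothetical, and "within 10%" is interpreted here as an absolute gap of 10 percentage points between the best and worst subgroup, which the text does not specify.

```python
from collections import defaultdict

MAX_PPV_GAP = 0.10  # tolerance stated in the proposal, read as an absolute gap


def ppv_by_group(records, threshold=0.68):
    """records: iterable of (group, risk_score, had_outcome) tuples.

    Returns {group: PPV} for every group with at least one flagged patient.
    """
    flagged = defaultdict(int)
    true_pos = defaultdict(int)
    for group, score, had_outcome in records:
        if score >= threshold:
            flagged[group] += 1
            true_pos[group] += int(had_outcome)
    return {g: true_pos[g] / flagged[g] for g in flagged}


def ppv_gap(ppvs):
    """Largest absolute PPV difference between any two groups."""
    values = list(ppvs.values())
    return max(values) - min(values) if values else 0.0


def within_tolerance(ppvs, max_gap=MAX_PPV_GAP):
    """True when the subgroup PPV spread stays inside the tolerance."""
    return ppv_gap(ppvs) <= max_gap
```

Groups with no flagged patients are omitted rather than assigned a PPV of zero, so a small subgroup does not trigger a spurious alert; in practice such groups would need separate review.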

Section 6. How will we measure success?

We designed specific success criteria for the prospective validation of the model and for the clinical trial. For model validation, measurements can be derived from data that is already being collected in APeX. The primary endpoint for clinical readiness is achieving a sensitivity of at least 0.4 while maintaining a PPV of at least 0.67. Currently, we far exceed the goal PPV and sensitivity at a threshold of 0.68, which allows us to flag a minimum of 8 patients per month. Secondary endpoints include: (1) chart review confirmation by the pain service of patients flagged as positive, (2) other traditional model performance metrics such as overall ROC-AUC and specificity, and (3) fairness measures, such as PPV differences across subgroups. We began prospective data collection in February 2025, and we will need AER and HIPAC support for future model implementation. Even in the absence of a formal trial, pain medicine clinicians may use the model’s predictions to inform care planning and patient optimization. In this case, we will also collect implementation metrics, such as the proportion of ML-identified high-risk patients referred to pain medicine.

For the clinical trial of an AI-driven TPS, additional measurements will need to be collected to evaluate the success of the AI. The primary endpoint will be a reduction in the incidence of post-surgical pain at 90 days by at least 10% among patients enrolled in the TPS compared to those receiving standard of care. Secondary outcomes include (1) patient-reported pain levels, (2) functional assessments, (3) opioid use (in MMEs), (4) healthcare costs at 30, 60, and 90 days post-discharge, and (5) clinician usage of and feedback on the model’s APeX interface.
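The clinical-readiness endpoint for model validation (sensitivity of at least 0.4 with PPV of at least 0.67 at the 0.68 threshold) reduces to a small calculation. The sketch below is a hedged illustration with hypothetical function names; it assumes binary outcome labels and treats a score equal to the threshold as flagged.

```python
def sensitivity_and_ppv(scores, outcomes, threshold=0.68):
    """Return (sensitivity, PPV) for predictions flagged at or above threshold.

    scores: model probabilities; outcomes: truthy when the patient met the outcome.
    """
    tp = fp = fn = 0
    for score, outcome in zip(scores, outcomes):
        flagged = score >= threshold
        if flagged and outcome:
            tp += 1
        elif flagged:
            fp += 1
        elif outcome:
            fn += 1
    # NaN marks an undefined metric (no positives, or nobody flagged).
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    ppv = tp / (tp + fp) if (tp + fp) else float("nan")
    return sensitivity, ppv


def meets_readiness(sensitivity, ppv, min_sensitivity=0.40, min_ppv=0.67):
    """Both criteria must hold; NaN inputs compare as False and therefore fail."""
    return sensitivity >= min_sensitivity and ppv >= min_ppv
```

Running the same two functions on each month of prospective data would give the month-to-month series needed to watch for performance drift.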

Section 7. Describe your qualifications and commitment:

Dr. Andrew Bishara, the project lead, has received a research career development (NIH K23) award to develop and implement AI models that predict a range of perioperative outcomes, with current efforts for validation and implementation underway at UCSF. Dr. Bishara will commit 10% of his time to supporting the development and implementation of this AI algorithm with the Health AI and AER teams. Dr. Christopher Abrecht, the co-lead, works closely with UCSF neurosurgery, seeing patients alongside the team at the Spine Center, and has launched a preliminary TPS to support their patients. Dr. Abrecht is also committed to advising on the implementation of the machine-learning model and supporting the development of an AI-driven TPS.

Comments

This seems like a good idea for how to triage and prioritize patients that might benefit from the TPS, and I like the proposed RCT design. How will you prospectively validate the accuracy of the prediction when the TPS service is currently active? You won't really be able to tell what would have happened to the patient WITHOUT the TPS service if they truly got the service, will you?

Dr. Pletcher, great point—you’re right that the presence of the active TPS service introduces a confounding factor in prospective validation, since outcomes may be altered by the intervention itself. This may make it difficult to assess what would have occurred without TPS involvement. To address this, we plan to exclude patients referred to the TPS from prospective validation. Given that only about two patients per week are referred, this exclusion is unlikely to significantly impact the performance metrics. In addition, we believe that current TPS referrals are influenced in part by personnel and service logistics—not solely by a patient’s risk for chronic pain. Therefore, we are reassured that excluding these patients will not disproportionately remove the highest-risk individuals, and our prospective validation will still accurately reflect the population entering the planned randomized controlled trial.