1. The UCSF Health problem.

Diagnostic errors are common and harmful. Previous work from our group suggests a missed or delayed diagnosis takes place in 25% of patients who die or who are transferred to the ICU; a diagnostic error is the direct cause of death in as many as 8% of deaths.

Our research team, as part of a multicenter study called Achieving Diagnostic Excellence through Prevention and Teamwork (ADEPT) led by UCSF has been prospectively gathering information about delayed or missed diagnoses at UCSF Health since 2023, focusing this time on RRT calls, ICU transfers, and deaths on the Medicine service. ADEPT randomly samples 10 cases per month, using a two-physician adjudication approach to develop gold-standard case reviews which yield not only an assessment of whether an error took place but also the harms related to the error, and any underlying diagnostic process faults.

Our current data suggest a significant opportunity for improvement, with 12% of patients who died, had an ICU transfer, or RRT call having a diagnostic error. Harms were substantial – with 17% of errors thought to be a cause of death, and 40% producing temporary or permanent harm (such as need for additional monitoring or testing, longer length of stay, or additional therapies). The most common diagnostic process fault was problems with assessment (e.g. anchoring on a diagnosis, or failing to recognize a stronger alternative), testing problems (not choosing the right test in a timely fashion). If we were to extrapolate the results from ADEPT to all 17000 admissions to UCSF Health, these numbers would represent more than 500 errors per year, of which 83 were a likely cause of death. Even if not causing death, 200 patients would have suffered longer hospital stays and additional and potentially unnecessary treatments.

2. How might AI help?

The field of patient safety in general, but diagnostic errors in particular, suffers from an inability of health systems to detect errors and opportunities for improvement at scale. Uniquely, diagnostic errors are extraordinarily hard to detect using administrative data, and determining underlying causes with any accuracy requires chart reviews such as our team carries out. AI tools, and LLM’s in particular, are a potentially groundbreaking approach to solving both problems.

Our current chart-based approach, while appropriate for research purposes as part of our ADEPT AHRQ-funded work, is time-consuming – taking up to 45” per case to finalize. A lighter-weight approach could be folded into existing case review programs (such as M&Ms) but would still need substantial support in determining underlying causes and developing an actionable set of priorities for leaders in Safety, or providers seeking to improve performance.

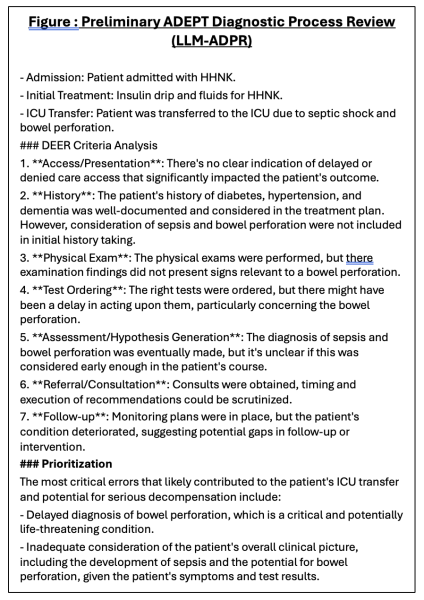

Preliminary data: We have recently begun work to develop llama-3 and GPT-4o LLMs with prompts derived by ADEPT diagnostic process review team (Figure). Prototype ADEPT Diagnostic Process Reviews (ADPR) utilize llama-3 and GPT-4o models (running in secure AWS cloud environment) against a Clarity-derived data model composed of all notes, results, orders, and vital signs (including intake/output) for the hospital stay, concatenated. Preliminary LLM-ADPRs are producing results that replicate our case reviews but are fairly unsophisticated; we are undertaking work now to improve clinical utility, and to determine correlation between our reviewers’ assessment and those of the LLM-ADPR.

Project plan: We propose to leverage the expertise of our case review teams, a large sample of more than 300 ‘gold standard’ cases, and pilot work we have currently underway using llama-3.1 and GPT-4o models leveraging EHR data gathered as part of ADEPT, to create an AI-powered diagnostic excellence learning cycle targeting Medicine patients.

Project plan: We propose to leverage the expertise of our case review teams, a large sample of more than 300 ‘gold standard’ cases, and pilot work we have currently underway using llama-3.1 and GPT-4o models leveraging EHR data gathered as part of ADEPT, to create an AI-powered diagnostic excellence learning cycle targeting Medicine patients.

By using ADEPTs existing case review process as a starting place to engineer prompts that replicate clinical adjudication and also monitor LLM-ADPR agreement with expert and users’ assessments, we will be able to generate diagnostic process-focused case summaries that replicate the evidence-based framework used in our research studies but at much lower time requirements. These summaries can then be used for at least three purposes:

1) As part of an enhanced UCSF mortality review process, with the LLM summary added to existing reviews as a way to identify opportunities for physician and system performance improvement,

2) As part of an automated diagnosis cross-check/diagnostic time out provided electronically to clinicians after an RRT takes place.

3) As part of an automated self-assessment and feedback system employed after an ICU transfer, or patient death has taken place. This summary may be presented in individual cases, or as a summary of all events which took place during a clinical block.

As a first step, we will map our current data model into HIPAC data sources, and then link HIPAC EHR data to data from our chart reviews to create our validation dataset (we anticipate 375-400 cases being available. Using these data we will iteratively test prompts against selected encounters, while also identifying methods (e.g. RAG) for dealing with longer hospital stays. Once we achieve a high level of clinical reliability and accuracy in the derivation set, we will proceed to deploying enhanced Mortality Review, diagnostic cross-check, and self-assessment pilots focused on Medicine patients and leveraging the existing partnerships between our ADEPT team and UCSF Health leadership. Emblematic of this partnership: our ADEPT review group is now a subgroup of the UCSF Patient Safety Committee and ADEPT cases with harmful errors are submitted as Incident Reports.

3. How would an end-user find and use it?

We do not plan to embed these case summaries in the medical record but would generate ADEPT-LLM diagnostic process summaries as part of existing case review processes (option 1, above), or deliver them linked to a REDcap survey within 12 hours of an RRT call (Option 2, above), or via REDCap survey 1 week of ICU transfer or death (Option 3). For each use case, the LLM-ADPR will provide a framework for reconsidering the case and diagnostic process, along with survey questions which will permit the end-user to agree or disagree with the summary and offer clarifications.

4. Embed a picture of what the AI tool might look like.

A preliminary version of the LLM-ADPR summary is provided; the LLM-ADPR would be accompanied by a REDCAP survey (or embedded in the survey, itself). A key step in our pilot/validation step will be to increase usability ofour LLM-ADPR output in addition to clinical accuracy.

5. What are the risks of AI errors?

Given that our AI output will not be used to actively direct clinical care, risks of hallucinations (e.g. false positive results), or unclear/imprecise results on patient care are nearly zero. However, it is possible that these same issues could produce higher work burden on patient safety reviewers or end recipients.

We do not envision this case review summary being represented in Apex but presented to providers and Patient Safety review staff separately from clinical workflows. This design increases the safety of our program, increases feasibility (since compute power for entire encounters is limited, particularly for real-team computations), and maps towards a future state where diagnostic cross-check and case feedback tools can be inserted into workflows or clinical scenarios where context length, clinical need, and patient factors increase overall effectiveness.

6. How will we measure success?

While the ultimate goal of this program is to reduce diagnostic errors, harms of diagnostic errors, and in turn mortality, ICU transfers, and RRT calls, it is unlikely we will show an effect on these outcomes in a year.

For this study period, we will measure agreement between LLM-ADPR documents and our recipient (e.g. patient safety staff, clinicians whose patients have a trigger event) in terms of their agreement with the identified opportunities for diagnostic improvement, as well as usability of the LLM-ADPR. In the 10-12 cases/month reviewed by ADEPT case reviewers (ADEPT will run through September 2026), we will be able to calculate agreement statistics compared to gold-standard adjudications. Finally, we will estimate the work-hours saved for patient safety staff and clinical reviewers, time which can eventually be better used to address care gaps rather than in gathering chart review data.

7. Describe your qualifications and commitment:

This program will be led by Andrew Auerbach MD MPH, PI of ADEPT and a leader of several informatics and implementation science-focused programs at UCSF, as well as our existing ADEPT Project Coordinator Tiffany Lee BA. Our team includes our current ADEPT review team (Drs. Molly Kantor, Peter Barish, Armond Esmaili), as well as informatics and LLM experts (Drs. Charumathi Subramanian and Madhumita Sushil); Dr. Sushil led development of the LLM-ADPR shown in Figure 2. Finally, our program has strong endorsement and support of Chief Quality Officer Dr. Amy Lu.

Comments

What you're proposing seems

What you're proposing seems like it could transform the way we assess the care we provide, and could scale up to provide feedback routinely to providers that could improve their skills and judgment. The system would have to be REALLY good, though - do you have prototypes? Can you iterate using data from Clarity to improve those prototypes offline?

Hi Mark - very sorry for the

Hi Mark - very sorry for the late reply on this.

We have a working prototype in llama-3 (example below) as well as GPT-4o and plan to use Clarity data for this exact purpose. In this way, we are positioned to run these reviews at regular (nightly, at time of Clarity copy-down) for both refinement of our prompts and validation work, as well as for our short term goal of doing active safety surveilance of events (ICU transfers, deaths, RRT calls) within 24 hours. We view our clarity/retrospective data infrastructure as the staging area for later broader uses of these same tools (e.g. for use after handoffs, or on daily rounds) or outside of Medicine patients.

Validation steps will be made possible through the existence of our current large body of validated chart reviews as well as through the activities of our case review teams, thereby allowing us to calculate traditional accuracy metrics prior to moving into broad and 'live' clinical use for our initial target events.

Our approach stands in stark contrast to differential diagnosis generation models, in that we also provide insights into not only the diagnoses which should be considered but also the possible cognitive or process (e.g. calling consultation) opportunities a clinician might have. Our team also is uniquely well trained to do ongoing monitoring and improvements of prompts due to its extensive experience in the areas of diagnostic excellence, patient safety, quality improvement, clinical informatics, and artificial intelligence.

Process opportunity suggestion:

Our current prompt simulates our case review process using both single shot and more advanced methods; we are developing these techniques in collaboration with Adam Rodman's lab at BIDMC and utilizing approaches which allow us to move context windows and chart views in ways that permit us to accomodate larger and smaller context lengths.

This is innovative, cutting

This is innovative, cutting edge patient safety work that will be transformative in being able to more meaningfully and feasibly detect diagnostic error that does not rely on (expensive) clinician manual review. Further work needs to be done to understand if AI can do this and this is the right team to lead this important work!

Interesting and meaningful

Interesting and meaningful use of AI tools to identify opportunities in patient safety and clinical reasoning and system failures / diagnostic errors that can benefit from improvement work

Terrific, important proposal

Terrific, important proposal by a team with deep expertise and support. As a former Director of Division of Hospital Medicine's Case Review Committee, I very much appreciate the extensive time/effort required for just creating an objective timeline for a subsequent comprehensive and valid case review/RCA. AI definitely has the potential to markedly expedite this process so human reviewers can focus efforts on the analytic component. Diagnostic errors have been a long-standing conundrum of how to best measure, analyze, and feed back to clinicians, and this would be an important step in addressing this universally important issue. Fully support!

This proposal is terrific and

This proposal is terrific and builds upon years of experience here at UCSF. The ability to efficiently scale a process (chart review) that is typically manual and time-intensive can both help identify improvement opportunities and create bandwidth for leaders to drive improvement work. This is extremely innovative work -- UCSF has an opportunity to create a national model with this project!

The existing infrastructure

The existing infrastructure and expertise that are already part of ADEPT present an incredible opportunity to move diagnostic excellence forward using AI. The proposed project is at the cutting edge of AI and diagnostic excellence, and is poised to explore uncharted -- yet vital -- territory.