Section 1. The UCSF Health Problem

Diagnostic error, the failure to establish or communicate an accurate and timely explanation of a patient’s health problem, affects 12 million people in the U.S. annually, leading to delays in treatment, potentially avoidable healthcare utilization, and increased morbidity and mortality.1

As a tertiary and quaternary referral center, UCSF and its clinicians face the ever-growing challenge of providing accurate and timely diagnosis for patients with high medical complexity. Achieving diagnostic excellence has grown more difficult for two reasons. First, the corpus of knowledge that physicians must command continues to expand quickly. Second, as patients become sicker and more complex, and as the volume of information about them in the electronic health record (EHR) increases, even the most capable physicians are challenged to incorporate all of it into their diagnostic heuristics. Instead, many examine only a limited set of recent EHR encounters to quickly familiarize themselves with the patient. This can leave historical blind spots that conceal important contextual clues to the diagnosis. Such blind spots matter most for complex patients: those with a greater comorbidity burden and higher rates of polypharmacy and adverse drug events, which may underlie or contribute to diagnoses, and those with cognitive changes such as delirium and dementia, who may be less able to describe important elements of the history of present illness. Large language models (LLMs), on the other hand, can examine vast quantities of the medical history in the EHR and synthesize, in near real time, tailored diagnoses based on that history and on new, evolving information from the current encounter.

Nationally, the diagnostic excellence movement, led by the Agency for Healthcare Research and Quality (AHRQ) and the recently defunct Society to Improve Diagnosis in Medicine (SIDM), has hungered for decades for tools with the potential to transform the field. Large language models (LLMs), if appropriately integrated into the EHR and able to review the entirety of a patient’s history, lab results, imaging, medications, and problem lists, offer an unprecedented opportunity to advance diagnostic excellence and healthcare quality in a generational leap forward.

Section 2. How might AI help?

Although differential diagnosis (DDx) generators - machine learning, artificial intelligence (AI), and rules-based tools meant to aid the clinician in the diagnostic process by suggesting a list of potential diagnoses based on the information at hand - are not new,2,3 their limited performance has hindered widespread adoption. Many of these tools, often based on probabilistic models, use only patient symptoms for assessment and do not take advantage of the documented physical exam, labs, imaging results, and other structured and unstructured data typically available in an EHR.4 LLMs, on the other hand, including those using retrieval-augmented generation (RAG) that benefit from access to a large corpus of medical domain-specific knowledge, have demonstrated impressive diagnostic capabilities in clinical vignettes and in limited studies.5–9

A Tale of 2 LLMs not Realizing their Full Potential: While UCSF established national leadership by creating a HIPAA-compliant LLM gateway (Versa), and a RAG-enriched version based on content from one of the largest medical text publishers in the world (Versa Curate), the lack of APeX/HIPAC integration has limited the realization of these tools' full clinical potential. Users wishing to take advantage of them at the point of care, for example, must open Versa in one application window and APeX in another, and copy/paste limited quantities of information from one to the other. Another LLM tool, Open Evidence (OE), enjoys widespread adoption at UCSF, but it is a standalone tool that is neither HIPAA-compliant yet nor integrated into APeX. As such, users often type in generic prompts and get relatively generic answers (Table 1). If these LLMs were instead integrated into APeX and could examine the entirety of a patient’s hospital encounter or outpatient records, rather than a copy/pasted fraction of a patient’s information in Versa Curate or manually typed generic information in OE, the potential opportunities for differential diagnosis (as well as a vast array of other use cases that could be governed with “prompt order sets”) would be immense and scalable across nearly every UCSF specialty.

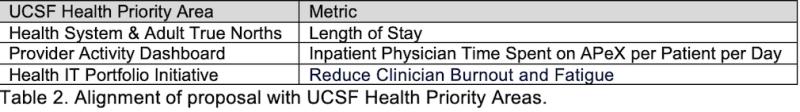

Primary Use Case: The primary use case for this proposal is to examine Versa Curate and OE as differential diagnosis generators that automatically review inpatient EHR data without manual prompting, and passively offer daily updated sidebar differential diagnoses. HIPAA compliance is an inclusion requirement for OE; if it is not met, the proposal will proceed with Versa Curate alone. This use case impacts several high priority areas (Table 2).

Secondary Use Case: Once Versa Curate and OE are integrated into APeX, the secondary use cases, which will not specifically be explored in this proposal, are immense, ranging from guideline-based medical management recommendations and identification of care gaps to assessment and feedback for learners on their clinical notes.

Section 3. How would an end-user find and use it?

The target end-users, Hospital Medicine clinicians, would see the LLM-generated DDx list passively residing as a sidebar directly in the APeX workflow (Figure 1). Because it is present to support the clinician, it would be purposefully visible but non-intrusive and non-interruptive.

Section 4. Embed a picture of what the AI tool might look like

Figure 1 shows the existing APeX workflow in which an LLM sidebar would contain a daily updated DDx list with the option to paste the list into the Progress Note and to like or dislike the DDx suggestions. This list would be generated and updated automatically on a daily basis without prompting. A user could choose from assistants or models (e.g. Versa Curate or OE).

Section 5. What are the risks of AI errors?

Although LLMs may make errors of omission, inaccuracy, and hallucination, the proposed use of AI for DDx generation poses relatively few risks because the DDx list is offered to the clinician as a suggestion of diagnoses to consider. It remains up to the clinician to decide whether and how to use the suggested information. In addition, users may report concerns with the “Report a concern” button to help investigators identify potential issues with the LLM DDx lists.

Section 6. How will we measure success?

Within the two domains listed below, we will measure success across three categories (People, Process, and Outcomes) and compare Versa Curate with OE. Although the specifics of the deployment will be discussed in detail with AER if funded, the goal will be to minimize friction to the existing clinical workflow that could impact adoption and user satisfaction. For this reason, we propose enabling the LLM-generated DDx list as a passive feature during the pilot for all Hospital Medicine providers, with the option to minimize the sidebar for those who prefer (see Figure 1). We will measure success over the pilot period as follows:

- Measurements using data already being collected in APeX:

  - People and Process: As a measure of both adoption and usage, we will use the Clarity audit logs to measure the rate at which users copy/paste elements from the DDx list into their progress notes (copy/paste instances / total number of progress notes in which a DDx list is visible). We will compare this rate between Versa Curate and OE.

  - Outcomes: Although the potential impact on healthcare outcomes remains speculative, we will compare the length of hospital stay for patients with at least one copy/paste event against that for patients with none, using the Wilcoxon rank-sum (Mann-Whitney U) test, which suits two independent groups, with p<.05 considered significant. Secondarily, in anticipation of important potential future uses beyond the pilot (e.g. future incorporation of LLM-generated DDx workup recommendations), we will silently run prompts in the background for LLM-based DDx workup recommendations and compare them for accuracy to actual actions taken by clinicians in APeX.

- Measurements not necessarily available in APeX, but ideal to have:

  - People: As part of the suggested build (see Figure 1), we will measure the rate of Like/Dislike clicks made in response to the DDx list for each of Versa Curate and OE. We will also capture the rates of “Report a concern” for each model.

  - Process and Outcomes: As above
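As a hedged illustration of the APeX-based measurements above, the following Python sketch computes the copy/paste rate per model and compares length of stay between two independent patient groups using the Wilcoxon rank-sum (Mann-Whitney U) test, the form of the Wilcoxon test that applies to unpaired samples. The record fields (`model`, `ddx_visible`, `copy_paste_events`), the function names, and the sample values are hypothetical and do not reflect the actual Clarity schema. The p-value relies on a normal approximation with tie correction, so an established statistics package would be preferable for small samples.

```python
import math
from collections import defaultdict


def copy_paste_rate(notes):
    """Copy/paste instances divided by progress notes with a visible DDx list.

    `notes` is an iterable of dicts with hypothetical keys:
    'model' (str), 'ddx_visible' (bool), 'copy_paste_events' (int).
    Returns a dict mapping each model to its rate.
    """
    events = defaultdict(int)
    visible = defaultdict(int)
    for note in notes:
        if note["ddx_visible"]:
            visible[note["model"]] += 1
            events[note["model"]] += note["copy_paste_events"]
    return {model: events[model] / visible[model] for model in visible}


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum (Mann-Whitney U) test.

    Uses midranks for ties and a normal approximation without
    continuity correction. Returns (U statistic for x, p-value).
    """
    n1, n2 = len(x), len(y)
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(combined)
    tie_term = 0
    i = 0
    while i < len(combined):
        j = i
        while j < len(combined) and combined[j][0] == combined[i][0]:
            j += 1
        midrank = (i + 1 + j) / 2  # average of 1-based ranks i+1 .. j
        for k in range(i, j):
            ranks[k] = midrank
        t = j - i
        tie_term += t**3 - t
        i = j
    r1 = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    n = n1 + n2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:  # all observations identical: no evidence of a difference
        return u1, 1.0
    z = (u1 - mean_u) / math.sqrt(var_u)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return u1, p_value


if __name__ == "__main__":
    # Illustrative, made-up records; not real audit-log data.
    notes = [
        {"model": "Versa Curate", "ddx_visible": True, "copy_paste_events": 1},
        {"model": "Versa Curate", "ddx_visible": True, "copy_paste_events": 0},
        {"model": "OE", "ddx_visible": True, "copy_paste_events": 1},
        {"model": "OE", "ddx_visible": False, "copy_paste_events": 0},
    ]
    print(copy_paste_rate(notes))

    # Made-up lengths of stay (days) for the two patient groups.
    los_with_copy_paste = [3, 4, 4, 5, 6]
    los_without = [4, 5, 6, 7, 9]
    u, p = rank_sum_test(los_with_copy_paste, los_without)
    print(f"U = {u}, p = {p:.3f}, significant at .05: {p < 0.05}")
```

Because patients with and without a copy/paste event are not randomized, any difference found this way would be an association rather than evidence of a causal effect.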

Figure 1. Mockup of LLM-generated DDx list in APeX.

Section 7. Describe your qualifications and commitment:

- Dr. Benjamin Rosner, MD, PhD is a hospitalist, a clinical informaticist, an AI researcher within DoC-IT, and the Faculty Lead for AI in Medical Education at the School of Medicine.

- Dr. Ralph Gonzales, MD, MSPH is the Associate Dean for Clinical Innovation and Chief Innovation Officer at UCSF Health.

- Dr. Brian Gin, MD, PhD is a pediatric hospitalist, visiting scholar at University of Illinois Chicago, and chief architect/developer of Versa Curate.

- Ki Lai is VP, Chief Data & Analytics Officer of UCSF Health.

- Dr. Christy Boscardin, PhD, is the Director of Artificial Intelligence and Student Assessment at the School of Medicine and the champion behind Versa Curate.

- Dr. Sumant Ranji, MD, is a hospitalist and the Director of the UCSF Coordinating Center for Diagnostic Excellence (CoDEx).

- Dr. Travis Zack, MD, PhD is an Assistant Professor of Medicine in Hematology-Oncology, and is a Senior Medical Adviser to Open Evidence.

Citations

Comments

Congrats on this novel proposal with high clinical relevance. It will be interesting to learn how clinicians find the usefulness and accuracy of Versa Curate vs Open Evidence. Embedding and automating tools within the EHR should benefit clinicians. How will the automatically generated differential diagnoses or other clinical reasoning aids affect trainees' learning?

I like the idea. Trying to make progress on diagnostic AI seems potentially very important, and I like the idea for how to get started. Do we have data on how often UCSF clinicians use the wrong working diagnosis for a patient? Do we have any information about that? Is this solving a real problem?

Great question, Mark! You've surfaced more of a big picture question than you may have anticipated, as this is the very quantification that diagnostic excellence researchers have struggled with for decades!

The real-world problem this is solving is one that AHRQ and the Society to Improve Diagnosis in Medicine (SIDM) have been working on for many years, but heretofore with just incremental steps forward. Namely, how do we, in fact, measure diagnostic excellence/accuracy? Hardeep Singh at Baylor / the VA has explained why so few health systems have a good measure for something we know is vastly under-recognized, stating that "The frequency of outpatient diagnostic errors is challenging to determine due to varying error definitions and the need to review data across multiple providers and care settings over time" (https://pubmed.ncbi.nlm.nih.gov/24742777/). A commonly cited figure is that 10-15% of medical diagnoses are wrong (https://tinyurl.com/mrx55fy6).

Until now, we have not had particularly good tools to "know" or assess, at scale, whether a diagnosis is correct (see, for example, the repository we've built at GoodDx.org, which lists all known tools that attempt to measure diagnostic accuracy). You'll note that there are very few of them, and most are extremely disease- or condition-specific. That's in part because we've not had access to a tool embedded in the EHR that is a "Gurpreet Dhaliwal" diagnostician.

In the broad research community of diagnostic excellence, the closest form of hard data we've had for "correct" or "incorrect" diagnosis comes from MedMal claims (e.g. CRICO data), but this is a very limited subset, covering only cases that have risen to malpractice claims, and it vastly under-represents the scope of the problem.

The multi-center ADEPT study that is currently underway (Andy Auerbach leads; https://tinyurl.com/yeuyvffc) attempts to quantify this by identifying a limited subset of patients (e.g. inpatients with codes, rapid responses, etc.), but it still requires human adjudicators. So, in fact, measuring "wrong diagnosis" at UCSF, as in other health systems, has been extremely challenging and highly limited.

But interestingly, the tools described by this proposal could both help us better quantify that (we'd have an opportunity to be among the first to actually quantify "diagnostic performance" at scale) and improve care delivery quality and timeliness at the same time.

In addition, according to the definition, diagnostic performance is not just about accuracy, but also about timeliness. A crucial element of the proposal is to speed diagnosis by surfacing potential diagnoses early, something that is particularly important in the inpatient setting.

Super excited for this amazing proposal and idea! Great way to leverage UCSF resources optimally to address two critical mission areas: clinical and education. Based on the latest studies, we would expect this to create more efficiency, increase accuracy of diagnosis/management, and decrease medical errors - especially for our trainees (residents/fellows). Super excited for this important work! I imagine that this will significantly increase the utility and capabilities of Versa for clinical work.

This proposal offers a great opportunity to enhance the precision of AI responses by automatically including the context of both the provider and patient through the integration of Epic with the LLM. The context can be derived from patient electronic records as well as the provider’s education level, specialty, diagnosis, order history, and other relevant information.

I noticed this is very similar to "Integrating OpenEvidence LLM into UCSF Epic for Evidence-Based Clinical Decision Support." Is there an opportunity to collaborate?

Agree with this astute observation (with advantage that this proposal also includes a comparison with Versa) and had same question about potential for synergy/collaboration!

With the ever-expanding (past and present) amount of data collected per patient, this type of work is exactly what is needed to help comb through it and serve some synthesized ideas and reminders to the clinicians to allow for true precision care. Well done, and looking forward to next steps, as part of the importance of this is also the fact that it opens doors to so many other projects. Excellent work.

The approach of embedding the tool within the EHR without the need for prompting is appealing because it makes the AI accessible and efficient. I am curious how you will approach rolling this out with trainees and studying the impact on their learning. Given that Versa Curate can generate citations linking to its sources, adding hyperlinks to the diagnostic lists that take the user to pertinent chapters could facilitate using the tool for deeper learning. Exciting project!

These are great suggestions, Susannah! Yes, one of the many benefits of this integration would be to study trainee use and learning, among others. In part, we will do that by ascertaining who is copy/pasting differentials into notes, or mirroring the suggestions of the differential diagnosis generators. Given that there are so many diseases, especially those that we don't see regularly, that may be reflected by certain patterns in labs, physical exam findings, etc., having a DDx generator that can keep track of all of these patterns and suggest corresponding diagnoses, may help us arrive not only at more accurate, but more timely diagnoses.

I share excitement for this proposal and its focus on expanding and tightening the learning feedback loops in the clinical workflow and the EHR, directly where the work is happening, using the power of AI and LLMs. I also agree wholeheartedly with the immense potential of the secondary use cases, particularly the extension of this approach and tooling into the trainee and health profession student realm, and how these tools could form the foundation for future learning and assessment activities.