Section 1: The UCSF Health Problem

Cochlear implants (CIs) restore speech comprehension and auditory perception for individuals with moderate to profound sensorineural hearing loss, and studies consistently demonstrate significantly improved quality of life [1]. Despite these benefits, there is a substantial gap in identifying and referring patients for cochlear implant consultation: in 2022, an estimated fewer than 10% of individuals with qualifying hearing loss used cochlear implants [2]. This gap stems from poor understanding of CI candidacy criteria and limited knowledge about CIs among primary care physicians, otolaryngologists, and audiologists, which leads to under-referral of eligible patients and geographic disparities in access [3, 4].

Previous efforts have relied primarily on clinician judgment, without systematic integration of audiometric data or objective measures into decision-making workflows, which limits effective referral. Only within the last five years have the first studies applying AI and machine learning to evaluate cochlear implant candidacy been published outside of UCSF, and these models have not yet been developed into tools usable by both patients and medical professionals, such as electronic health record (EHR) alert systems [5, 6, 7]. A related practical barrier is how audiometric data are stored in the APeX EHR: APeX cannot automatically generate visually useful digital audiograms for providers reading patient notes.

The primary end users of the proposed AI solution would be primary care providers, audiologists, otolaryngologists, and other clinicians involved in the early stages of hearing loss assessment, as well as patients undergoing audiology testing.

Section 2: How Might AI Help?

Artificial intelligence offers a promising solution by automating the interpretation of audiometric data to improve the accuracy and consistency of CI referrals. This project will use audiogram results and Consonant-Nucleus-Consonant (CNC) word recognition scores from the de-identified UCSF Commons Database (2011-2024) to train machine learning (ML) models. Additional inputs will include insurance coverage, history of prior hearing aid use, and specialist referral history, along with demographic data such as age, sex, and preferred language.
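As a rough illustration of how one visit might become a model input row, the sketch below assumes a hypothetical record layout (the actual UCSF Commons Database fields are not described in this proposal). It computes a three-frequency pure-tone average (PTA at 500, 1000, and 2000 Hz, an assumed convention) per ear and takes the better-ear PTA and CNC score. Categorical inputs such as insurance coverage and referral history would need their own encoding and are omitted here.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional


# Hypothetical visit record; field names are illustrative, not the UCSF Commons schema.
@dataclass
class AudiologyVisit:
    age: int
    sex: str                             # e.g. "F" or "M"
    preferred_language: str
    prior_hearing_aid: bool
    thresholds_left: Dict[int, float]    # air-conduction thresholds, Hz -> dB HL
    thresholds_right: Dict[int, float]
    cnc_left: Optional[float] = None     # CNC word score, percent correct
    cnc_right: Optional[float] = None


# Assumption: a three-frequency PTA; some protocols also include 4000 Hz.
PTA_FREQS = (500, 1000, 2000)


def pure_tone_average(thresholds: Dict[int, float]) -> Optional[float]:
    """Mean threshold over PTA_FREQS, or None if any of those frequencies is missing."""
    if any(f not in thresholds for f in PTA_FREQS):
        return None
    return sum(thresholds[f] for f in PTA_FREQS) / len(PTA_FREQS)


def to_features(v: AudiologyVisit) -> Dict[str, float]:
    """Flatten one visit into numeric features; missing values become NaN."""
    pta_l = pure_tone_average(v.thresholds_left)
    pta_r = pure_tone_average(v.thresholds_right)
    ptas = [p for p in (pta_l, pta_r) if p is not None]
    cncs = [c for c in (v.cnc_left, v.cnc_right) if c is not None]
    return {
        "age": float(v.age),
        "pta_left": pta_l if pta_l is not None else math.nan,
        "pta_right": pta_r if pta_r is not None else math.nan,
        # "Better ear" is taken per measure: lowest PTA, highest CNC score.
        "pta_better_ear": min(ptas) if ptas else math.nan,
        "cnc_better_ear": max(cncs) if cncs else math.nan,
        "prior_hearing_aid": 1.0 if v.prior_hearing_aid else 0.0,
        "is_female": 1.0 if v.sex.upper() == "F" else 0.0,
        "non_english_preferred": 0.0 if v.preferred_language.lower() == "english" else 1.0,
    }
```

For example, right-ear thresholds of 55, 65, and 75 dB HL and left-ear thresholds of 60, 70, and 80 dB HL give per-ear PTAs of 65.0 and 70.0, so `pta_better_ear` is 65.0.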

The AI tool will be built with several functions in mind. First, it will navigate the complex storage and organization of audiometric data in APeX, surfacing the most important test values and explaining their significance to providers, including primary care providers who may have no experience interpreting audiometric results. The centerpiece of this feature will be the automated conversion of audiometric data into an easy-to-read chart.
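One simple way to present filed thresholds in readable form is a frequency-by-ear table that labels each threshold with a plain-language degree of hearing loss. The sketch below assumes thresholds arrive as frequency-to-dB HL mappings and uses the widely cited Clark (1981) degree bands; which scale the UCSF dashboard would actually display is an open design choice, and a graphical audiogram would likely replace this text table in practice.

```python
from typing import Dict

# Degree-of-loss bands in dB HL, upper bound inclusive (Clark, 1981 scale).
# Assumption: the production dashboard may use a different classification.
DEGREE_BANDS = [
    (15, "normal"),
    (25, "slight"),
    (40, "mild"),
    (55, "moderate"),
    (70, "moderately severe"),
    (90, "severe"),
]


def degree_of_loss(threshold_db: float) -> str:
    """Map a single threshold (dB HL) to a plain-language degree label."""
    for upper, label in DEGREE_BANDS:
        if threshold_db <= upper:
            return label
    return "profound"


def _cell(thresholds: Dict[int, float], freq: int) -> str:
    value = thresholds.get(freq)
    if value is None:
        return "not tested"
    return f"{value:.0f} dB HL ({degree_of_loss(value)})"


def audiogram_table(right: Dict[int, float], left: Dict[int, float]) -> str:
    """Text table: one row per tested frequency, one column per ear."""
    header = f"{'Frequency':>10} | {'Right ear':<28} | Left ear"
    rows = [header, "-" * len(header)]
    for freq in sorted(set(right) | set(left)):
        rows.append(f"{freq:>7} Hz | {_cell(right, freq):<28} | {_cell(left, freq)}")
    return "\n".join(rows)


print(audiogram_table({500: 55, 1000: 65, 4000: 95}, {500: 60, 1000: 70}))
```

Frequencies tested in only one ear show "not tested" for the other, so gaps in the record stay visible to the reader rather than being silently dropped.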

In addition to providing this visualization, the AI model will interpret the audiometric data to determine whether a patient should be considered for CI candidacy evaluation. This may take the form of an EHR alert for providers at a follow-up appointment, or an automated educational message explaining what a cochlear implant is and how it might benefit a patient flagged as a potential CI candidate. Overall, the AI model will generate actionable, interpretable recommendations, addressing the current gap in clinician knowledge and promoting equitable patient referrals.
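Because the proposal emphasizes interpretable recommendations grounded in well-defined criteria, a transparent rule-based screen is a natural baseline against which to compare the ML model. The sketch below is loosely modeled on the 60/60 referral guideline discussed in [6] (better-ear PTA of at least 60 dB HL and better-ear word recognition score of at most 60%). The cutoffs are parameters, and the exact criteria UCSF would apply are an assumption to be confirmed with the CI team. Each decision carries human-readable reasons that could populate an EHR alert.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ScreenResult:
    flag_for_ci_evaluation: bool
    reasons: List[str] = field(default_factory=list)


def screen_60_60(
    pta_better_ear: Optional[float],
    cnc_better_ear: Optional[float],
    pta_cutoff: float = 60.0,   # dB HL; assumed cutoff, configurable
    cnc_cutoff: float = 60.0,   # percent correct; assumed cutoff, configurable
) -> ScreenResult:
    """Rule-based referral screen with an explanation for each criterion."""
    if pta_better_ear is None or cnc_better_ear is None:
        # Never flag on incomplete data; report what is missing instead.
        return ScreenResult(False, ["Incomplete data: better-ear PTA and CNC score are both required."])
    meets_pta = pta_better_ear >= pta_cutoff
    meets_cnc = cnc_better_ear <= cnc_cutoff
    reasons = [
        f"Better-ear PTA {pta_better_ear:.0f} dB HL "
        f"{'>=' if meets_pta else '<'} {pta_cutoff:.0f} dB HL",
        f"Better-ear CNC {cnc_better_ear:.0f}% "
        f"{'<=' if meets_cnc else '>'} {cnc_cutoff:.0f}%",
    ]
    return ScreenResult(meets_pta and meets_cnc, reasons)
```

Keeping the rule explicit means every flag can be traced to specific test values, which is the kind of constraint the proposal relies on to limit hallucinated recommendations.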

Section 3: How Would an End-User Find and Use It?

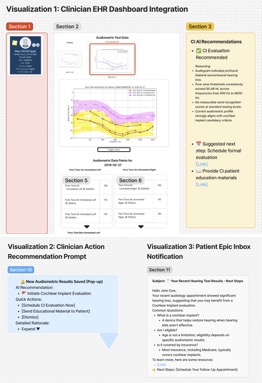

1) The AI tool will integrate directly with existing Electronic Health Record (EHR) systems (e.g., APeX). When audiometric test results are entered, the AI model will automatically analyze them and generate a simple dashboard that references up-to-date referral criteria and shows predictive visualizations of patient audiograms, highlighting thresholds relevant to CI candidacy.

2) Clinicians will receive concise recommendations regarding CI candidacy and suggested next steps, such as initiating a formal CI evaluation or providing patient education materials. Because the integration occurs at the point of audiometric data entry, it streamlines the workflow without requiring additional steps from clinicians.

3) Patients will receive short informational notifications in their Epic inbox when their audiology results fall outside expected hearing thresholds, recommending follow-up to determine whether they meet CI candidacy criteria. These notifications would also provide information about CIs and address common misconceptions and frequently asked questions.
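The three steps above amount to an event hook: when results are filed, screen them and route one message to the clinician and one to the patient. The sketch below assumes a hypothetical `on_audiogram_filed` hook that receives an already-computed screening decision; it does not use any real Epic or APeX interface, and the message wording is placeholder text that would need clinical and patient-education review.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Notification:
    recipient: str  # "clinician" or "patient"
    text: str


def on_audiogram_filed(patient_id: str, flagged: bool, reasons: List[str]) -> List[Notification]:
    """Hypothetical hook called at audiometric data entry; returns messages to deliver."""
    if not flagged:
        # No alert: avoid adding noise to the clinician's workflow.
        return []
    clinician_text = (
        f"Patient {patient_id} may meet CI referral criteria ({'; '.join(reasons)}). "
        "Suggested actions: initiate a formal CI evaluation or share CI education materials."
    )
    patient_text = (
        "Your recent hearing test results were outside the expected range. "
        "Please follow up with your care team to discuss whether a cochlear implant "
        "evaluation is right for you. Information about cochlear implants and answers "
        "to common questions are included below."
    )
    return [Notification("clinician", clinician_text), Notification("patient", patient_text)]
```

Returning messages rather than sending them keeps the decision logic testable and leaves delivery (EHR alert, patient inbox) to whatever integration layer is actually available.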

What the AI tool might look like:

What Are the Risks of AI Errors?

Implementing an AI-driven audiometric assessment tool presents several risks, including false positives, false negatives, and AI hallucinations. False positives (erroneous recommendations for CI referral) may lead to unwarranted patient anxiety, unnecessary clinical evaluations, increased healthcare costs, and decreased patient trust. Conversely, false negatives represent a critical failure to identify eligible candidates: they perpetuate the current gap in CI referrals, delay intervention, and negatively impact patient outcomes.

Importantly, in this application, AI is used to organize audiometric test data and to address knowledge gaps by applying well-defined CI criteria, which constrain the model's outputs and limit the risk of hallucinations. Patients referred for CI consultation will be evaluated by human experts, whose assessments provide feedback for identifying errors made by the AI model.

To systematically measure and mitigate these risks, the AI model will undergo rigorous retrospective and prospective validation against expert clinician recommendations. Performance metrics, including sensitivity, specificity, positive predictive value, and negative predictive value, will be monitored continuously. Regular audits and data-quality assessments will help identify discrepancies and anomalies indicative of hallucinations or other errors. Structured clinician feedback loops, together with ongoing model retraining and recalibration, will support continuous performance improvement, minimizing errors and optimizing patient safety and clinical effectiveness.
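The four metrics named above follow directly from a confusion matrix of model flags against expert determinations. A minimal sketch, assuming each validated case is recorded as a pair (model flagged, expert judged a candidate); the target floors passed to `below_target` are hypothetical placeholders, not values from this proposal.

```python
from typing import Dict, Iterable, List, Tuple


def confusion_counts(pairs: Iterable[Tuple[bool, bool]]) -> Dict[str, int]:
    """Tally (model_flagged, expert_candidate) pairs into TP/FP/FN/TN."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for predicted, actual in pairs:
        if predicted and actual:
            counts["tp"] += 1
        elif predicted:
            counts["fp"] += 1
        elif actual:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


def _ratio(num: int, den: int) -> float:
    # NaN signals "no cases in the denominator" rather than a misleading 0 or 1.
    return num / den if den else float("nan")


def screening_metrics(c: Dict[str, int]) -> Dict[str, float]:
    return {
        "sensitivity": _ratio(c["tp"], c["tp"] + c["fn"]),
        "specificity": _ratio(c["tn"], c["tn"] + c["fp"]),
        "ppv": _ratio(c["tp"], c["tp"] + c["fp"]),
        "npv": _ratio(c["tn"], c["tn"] + c["fn"]),
    }


def below_target(metrics: Dict[str, float], targets: Dict[str, float]) -> List[str]:
    """Metrics under their floor; NaN (no data) also counts as failing."""
    return [name for name, floor in targets.items() if not metrics[name] >= floor]
```

One caveat for prospective monitoring: false negatives are only observed for patients who receive an expert evaluation, so sensitivity and NPV likely need periodic retrospective chart review rather than referral outcomes alone.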

Section 4: How Will We Measure Success?

To determine whether the AI-driven audiometric tool achieves its intended impact, success will be evaluated using a comprehensive measurement framework, addressing usage, clinical behavior change, patient outcomes, and equity considerations.

Measurements using data already collected in APeX:

- Frequency and percentage of clinicians interacting with the AI-driven recommendations after audiometric data entry.

- Rate of CI referrals generated pre-/post-AI implementation.

- Compliance rates with AI-generated recommendations among clinicians.

- Analysis of referral accuracy compared to historical clinician referral patterns.

- Time from initial audiometric evaluation to formal CI assessment referral.

- Demographic breakdown of referrals pre-/post-implementation to assess changes in the equity of referral patterns across age, race/ethnicity, geographic location, and insurance type.
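Several of the APeX-based measures above reduce to comparing proportions before and after go-live, overall and within demographic groups, plus a summary of time to referral. A minimal sketch, assuming referral events can be exported as hypothetical (period, group, referred) records. The pooled two-proportion z-test is a simple choice swapped in for illustration; a plain pre/post comparison does not account for secular trends, so an interrupted time-series design may be more appropriate.

```python
import math
from collections import defaultdict
from statistics import NormalDist, median
from typing import Dict, Iterable, List, Tuple


def two_proportion_z(x_pre: int, n_pre: int, x_post: int, n_post: int) -> Tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_pre, p_post = x_pre / n_pre, x_post / n_post
    pooled = (x_pre + x_post) / (n_pre + n_post)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_pre + 1 / n_post))
    if se == 0:
        return 0.0, 1.0  # both periods all-referred or none-referred
    z = (p_post - p_pre) / se
    return z, 2 * (1 - NormalDist().cdf(abs(z)))


def referral_rates_by_group(records: Iterable[Tuple[str, str, bool]]) -> Dict[Tuple[str, str], float]:
    """records: (period, group, referred) with period 'pre' or 'post'."""
    counts = defaultdict(lambda: [0, 0])  # (period, group) -> [referred, total]
    for period, group, referred in records:
        counts[(period, group)][0] += int(referred)
        counts[(period, group)][1] += 1
    return {key: referred / total for key, (referred, total) in counts.items()}


def median_days_to_referral(days: List[float]) -> float:
    """Median interval from audiometric evaluation to CI assessment referral."""
    return median(days)
```

Running `referral_rates_by_group` separately for each grouping variable (age band, race/ethnicity, geography, insurance type) gives the demographic breakdown; small groups will produce unstable rates and should be interpreted cautiously.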

Additional ideal measurements to evaluate success:

- Clinician satisfaction and perception of AI tool usability, accuracy, and clinical value (via surveys and qualitative feedback).

- Patient satisfaction with the referral process and understanding of CI candidacy.

- Assessment of clinician knowledge about CI eligibility criteria pre-/post-AI implementation.

- Disparities in referral rates and clinical outcomes among different demographic groups (age, ethnicity, geography, and socioeconomic status).

Evidence required to sustain UCSF Health leadership support includes consistent improvement in CI referral accuracy, increased clinician adoption rates, improved patient outcomes, and enhanced equitable access to cochlear implantation services. Conversely, abandonment of the tool would be considered if substantial AI-driven inaccuracies persist, referral disparities worsen, clinician and patient dissatisfaction is consistently high, or negligible improvements in clinical outcomes are observed.

Section 5: Describe Your Qualifications and Commitment

Y. Song Cheng, BM BCh, is a fellowship-trained neuro-otologist and CI surgeon at the UCSF Cochlear Implant Center. His research interests include innovative CI technology, CI outcomes, and CI candidacy in the elderly. He has published actively on cochlear implant outcomes and in the field of hearing science for the past decade.

Nicole T. Jiam, MD, is the Director of the UCSF Otolaryngology Innovation Center and a neuro-otologist. She has published extensively on audiology disparities and AI-driven referral optimization, holds digital health patents, serves on health tech advisory boards, and guides AI tool development for cochlear implant candidate screening at UCSF. Dr. Jiam will actively guide the development of the AI tools in this project and participate in regular progress reviews with UCSF’s AER team.

Connie Chang-Chien, BS, is a UCSF medical student with a computational medicine research background from Johns Hopkins University and Mayo Clinic, as well as Fulbright research in Japan. She has contributed to the preliminary analysis of audiometric data and the design of the EHR mockup, and going forward will develop the models for audiogram interpretation and predictive CI referral.

Works Cited

1. McRackan TR, Bauschard M, Hatch JL, Franko-Tobin E, Droghini HR, Nguyen SA, Dubno JR. Meta-analysis of quality-of-life improvement after cochlear implantation and associations with speech recognition abilities. Laryngoscope. 2018 Apr;128(4):982-990. doi: 10.1002/lary.26738. Epub 2017 Jul 21. PMID: 28731538; PMCID: PMC5776066.

2. Marinelli JP, Sydlowski SA, Carlson ML. Cochlear Implant Awareness in the United States: A National Survey of 15,138 Adults. Semin Hear. 2022 Dec 1;43(4):317-323. doi: 10.1055/s-0042-1758376. PMID: 36466559; PMCID: PMC9715307.

3. Naz T, Butt GA, Shahid R, Jabbar U, Mirza HM, Kanwal S. Awareness of Health Professionals about Candidacy of Cochlear Implant. Journal of Health and Rehabilitation Research. 2024;4(1):1417-1424. doi: 10.61919/jhrr.v4i1.678.

4. Nassiri AM, Marinelli JP, Sorkin DL, Carlson ML. Barriers to Adult Cochlear Implant Care in the United States: An Analysis of Health Care Delivery. Semin Hear. 2021 Dec 9;42(4):311-320. doi: 10.1055/s-0041-1739281. PMID: 34912159; PMCID: PMC8660164.

5. Carlson ML, Carducci V, Deep NL, DeJong MD, Poling GL, Brufau SR. AI model for predicting adult cochlear implant candidacy using routine behavioral audiometry. Am J Otolaryngol. 2024 Jul-Aug;45(4):104337. doi: 10.1016/j.amjoto.2024.104337. Epub 2024 Apr 23. PMID: 38677145.

6. Patro A, Perkins EL, Ortega CA, Lindquist NR, Dawant BM, Gifford R, Haynes DS, Chowdhury N. Machine Learning Approach for Screening Cochlear Implant Candidates: Comparing With the 60/60 Guideline. Otol Neurotol. 2023 Aug 1;44(7):e486-e491. doi: 10.1097/MAO.0000000000003927. Epub 2023 Jun 29. PMID: 37400135; PMCID: PMC10524241.

7. Shafieibavani E, Goudey B, Kiral I, Zhong P, Jimeno-Yepes A, Swan A, Gambhir M, Buechner A, Kludt E, Eikelboom RH, Sucher C, Gifford RH, Rottier R, Plant K, Anjomshoa H. Predictive models for cochlear implant outcomes: Performance, generalizability, and the impact of cohort size. Trends Hear. 2021 Jan-Dec;25:23312165211066174. doi: 10.1177/23312165211066174. PMID: 34903103; PMCID: PMC8764462.

Comments

You are proposing AI for real-time clinical decision support, I think. Does the decision support need to be real-time? Could an algorithm be run periodically across past/recent audiograms, and then trigger a short MyChart message to clinicians to consider CI?

There is often an interval of several days or even a few weeks between performing an audiogram and the clinician interpreting the audiometric results. As such, an algorithm run periodically would serve its purpose perfectly well.

What is the significance of running the algorithm periodically vs in real time? Is there a notable difference in computing resources? Thank you.
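The periodic alternative raised in these comments could look like the sketch below: a scheduled job scans audiograms filed within a lookback window, skips patients already referred, and returns IDs for a clinician message. The `FiledAudiogram` record type, the 14-day default window, and the placeholder 60/60-style rule are all assumptions for illustration. Because each audiogram is scored once in either design, the difference between periodic and real-time screening is likely more about EHR integration (a scheduled query versus a hook at data entry) than about computing resources.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, Iterable, List


@dataclass
class FiledAudiogram:
    # Hypothetical extract of a filed audiogram; not an actual APeX/Epic schema.
    patient_id: str
    test_date: date
    pta_better_ear: float   # dB HL
    cnc_better_ear: float   # percent correct
    already_referred: bool


def rule_60_60(a: FiledAudiogram) -> bool:
    """Placeholder candidacy rule; a trained model's decision could be substituted."""
    return a.pta_better_ear >= 60 and a.cnc_better_ear <= 60


def periodic_screen(
    audiograms: Iterable[FiledAudiogram],
    today: date,
    lookback_days: int = 14,
    is_candidate: Callable[[FiledAudiogram], bool] = rule_60_60,
) -> List[str]:
    """Return patient IDs with a recent, unreferred audiogram that screens positive."""
    window_start = today - timedelta(days=lookback_days)
    return [
        a.patient_id
        for a in audiograms
        if window_start <= a.test_date <= today
        and not a.already_referred
        and is_candidate(a)
    ]
```

The lookback window should be at least as long as the interval between runs so that no filed audiogram falls between two scans.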