The UCSF Health problem: Hospital discharges are among the most vulnerable transitions in patient care, where errors and miscommunication can lead to missed follow-up, patient harm, and readmissions. National data show that nearly 1 in 5 Medicare patients are readmitted within 30 days of hospital discharge, often due to missed appointments, unfilled prescriptions, or unrecognized clinical deterioration (Jencks et al., NEJM 2009;360:1418-28). Effective discharge instructions are essential for patient understanding and self-management post-hospitalization. However, research indicates that discharge summaries often lack key components, such as clear communication of pending follow-up tasks and pending test results, which are vital for the continuity of care (Chatterton et al., J Gen Intern Med. 2024;39(8):1438-1443 and Al-Damluji et al., Circ Cardiovasc Qual Outcomes. 2015;8(1):77-86). Although discharge summaries, after-visit summary (AVS) instructions, and follow-up plans are documented, UCSF currently lacks a scalable, AI-powered solution to ensure patients adhere to these instructions and complete their post-discharge tasks, leading to potential gaps in post-discharge care.

We propose an AI-powered post-discharge checklist generator that:

- Parses discharge AVS instructions, discharge summaries, and the most recent consultant notes using a large language model (LLM) to identify actionable tasks for both the patient and the provider team. A further goal is to cross-reference these tasks against the “standard of care” to reduce inconsistencies and omissions.

- Generates a real-time post-discharge checklist to ensure structured follow-up actions are clearly tracked within APeX.

- Shares post-discharge checklist updates with CTOP (Care Transitions Outreach Program) and outpatient providers to improve care continuity for recently discharged patients.

- Facilitates communication with the patient directly via both automated outreach and CTOP-specific communication to improve adherence to follow-up instructions.

Previous attempts using electronic health record (EHR) tools or manual discharge audits have failed to scale due to reliance on provider team memory or incomplete tracking. Our solution will augment, not replace, existing discharge processes by introducing structure and accountability. It ultimately aims to enhance patient satisfaction and safety, reduce readmissions, and improve coordination between hospital and outpatient teams.

How might AI help? Our approach will leverage LLMs and EHR data to parse discharge AVS instructions and discharge summaries to extract follow-up tasks, and automatically generate structured checklists to streamline discharge follow-up and improve care coordination. The system will:

- Parse discharge AVS instructions, discharge summaries, and the most recent consultant notes using natural language processing (NLP) to identify tasks (e.g., attend follow-up appointments, pick up medications, complete labs).

- Track completion of the “discharge checklist” by querying the EHR for relevant data (e.g., appointment attendance, lab or radiology orders, and confirmation of prescription pick-up, where available).

- Provide automated real-time reports to CTOP and care coordination teams (discharge providers and primary care providers with access to APeX can opt in for their own auditing and quality improvement) to prompt targeted patient outreach when checklist tasks remain outstanding.

- Future extensions may include customizable patient nudges via interactive patient-facing chatbot support, or augmenting LLM-generated discharge summaries for outpatient providers or LLM-generated AVS summaries (see separate proposals submitted by Division of Hospital Medicine).
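
The tracking and reporting steps above can be sketched as a small data structure. This is a minimal illustration under stated assumptions, not the proposed implementation: the `ChecklistTask` fields, the category names, and both helper functions are hypothetical, and the "overdue" outreach rule is an assumed simplification. In practice the completion flags would come from EHR data rather than being set by hand.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class ChecklistTask:
    """One follow-up item extracted from discharge documents (hypothetical schema)."""
    description: str
    category: str                  # e.g. "appointment", "medication", "lab"
    due: Optional[date] = None     # None when the source note gives no date
    completed: bool = False        # would be derived from EHR data where available


def is_overdue(task: ChecklistTask, today: date) -> bool:
    """A task is overdue if it is incomplete and its due date has passed."""
    return (not task.completed) and task.due is not None and task.due < today


def outstanding_tasks(tasks: List[ChecklistTask], today: date) -> List[ChecklistTask]:
    """Return incomplete tasks: overdue first, then by due date, undated last."""
    open_tasks = [t for t in tasks if not t.completed]
    return sorted(
        open_tasks,
        key=lambda t: (not is_overdue(t, today), t.due or date.max),
    )


def needs_outreach(tasks: List[ChecklistTask], today: date) -> bool:
    """Assumed rule: flag the patient when any task is overdue."""
    return any(is_overdue(t, today) for t in tasks)
```

A report for CTOP could then list `outstanding_tasks(...)` for each patient where `needs_outreach(...)` is true; the actual trigger criteria would need to be defined with the outreach team.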

How would an end-user find and use it? Discharging physicians and interprofessional care team members can view the AI-generated post-discharge checklist embedded in the APeX workflow in the discharge documentation interface while completing their discharge summary. This real-time post-discharge checklist generation will:

● Help discharging physicians identify missing or unclear discharge instructions from pre-existing primary team and consultant notes.

● Cross-reference the AI-generated post-discharge checklist with the AVS to ensure consistency and completeness.

Both outpatient primary care and the CTOP team will have real-time access to the updated post-discharge checklist. Key features include:

● Customizable patient tracking lists

● Ongoing monitoring: daily tracking of discharge checklist completion will be semi-automated (AI updates the checklist at least daily; secondary layer of confirmation by human users)
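
The semi-automated monitoring described above could be modeled as a two-step status change, in which the automated update can only propose completion and a human user confirms it. This is a minimal sketch; the status names and both functions are hypothetical and do not correspond to any existing APeX feature.

```python
from enum import Enum


class TaskStatus(Enum):
    OPEN = "open"
    AI_SUGGESTED_COMPLETE = "ai_suggested_complete"  # set by the daily automated update
    CONFIRMED_COMPLETE = "confirmed_complete"        # set only by a human reviewer


def ai_daily_update(status: TaskStatus, evidence_found: bool) -> TaskStatus:
    """Automated pass: may propose completion but never confirms it."""
    if status is TaskStatus.OPEN and evidence_found:
        return TaskStatus.AI_SUGGESTED_COMPLETE
    return status


def human_review(status: TaskStatus, reviewer_agrees: bool) -> TaskStatus:
    """Human pass: confirm an AI suggestion, or reopen the task if it is wrong."""
    if status is TaskStatus.AI_SUGGESTED_COMPLETE:
        return TaskStatus.CONFIRMED_COMPLETE if reviewer_agrees else TaskStatus.OPEN
    return status
```

Keeping confirmation as a separate, human-only transition means an incorrect automated update cannot silently close a task.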

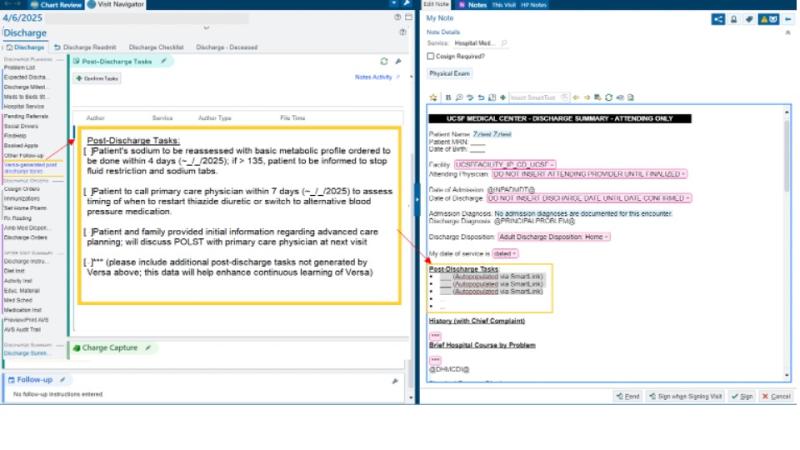

What the AI tool might look like. In our initial pilot, we envision adding a toggle button in the “Hospital Medicine SVC” contexts for the Parnassus campus. The initial mock-up of this function is shown below with possible examples of AI-generated post-discharge checklist tasks:

Once the post-discharge tasks are confirmed by the discharging provider, we envision these tasks auto-populating via SmartLink into the discharge summary. Additionally, these post-discharge tasks will be added to the “To Do” column in the “PARN - 24h D/C” and “PARN - 4d D/C” System Lists, to ensure appropriate monitoring for the outpatient team and CTOP.

What are the risks of AI errors? Our AI-generated post-discharge checklist is designed as an augmentative support system rather than a provider of clinical guidance. Its primary role is to streamline best-practice, patient-centered communication after discharge. The post-discharge checklists will be available in real-time, allowing providers to review, validate, and identify potential discrepancies, including:

● Ambiguous language in discharge summaries

● Inconsistencies between discharge summaries and the AVS

Potential AI Errors and Their Impact

- False Positives – the LLM may hallucinate or incorrectly interpret text in the discharge summary or AVS as a follow-up task when no such action is actually needed; if not verified by the discharging provider, this can lead to unnecessary CTOP outreach or patient notifications.

- False Negatives – the LLM may look at vague or ambiguous text in the discharge summary or AVS and overlook or miss tasks; if not verified by the discharging provider, this could create additional confusion for both the patient and outpatient providers.
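
One way to quantify these two error types is to compare the AI-extracted tasks for a discharge with the tasks a clinician reviewer considers correct. The sketch below assumes tasks can be matched by a normalized label, which is a simplification (real matching would likely require clinical judgment); the function name and the example labels are illustrative only.

```python
from typing import Dict, Optional, Set


def audit_extraction(ai_tasks: Set[str], reviewer_tasks: Set[str]) -> Dict[str, object]:
    """Compare AI-extracted tasks with reviewer-validated tasks for one discharge."""
    true_positives = ai_tasks & reviewer_tasks
    false_positives = ai_tasks - reviewer_tasks   # extracted, but not actually needed
    false_negatives = reviewer_tasks - ai_tasks   # needed, but missed by the AI

    # Precision and recall are undefined when the denominator is empty.
    precision: Optional[float] = (
        len(true_positives) / len(ai_tasks) if ai_tasks else None
    )
    recall: Optional[float] = (
        len(true_positives) / len(reviewer_tasks) if reviewer_tasks else None
    )
    return {
        "false_positives": false_positives,
        "false_negatives": false_negatives,
        "precision": precision,
        "recall": recall,
    }


# Illustrative example with made-up task labels
result = audit_extraction(
    ai_tasks={"pcp follow-up", "repeat bmp", "cardiology referral"},
    reviewer_tasks={"pcp follow-up", "repeat bmp", "home health visit"},
)
```

Here low precision corresponds to many false positives (unnecessary outreach), and low recall corresponds to many false negatives (missed tasks).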

Error Mitigation Strategy

● Provider oversight: real-time visibility allows discharging physicians to validate AI-generated post-discharge checklists and address discrepancies by editing prior to the patient’s discharge.

● Manual audits and feedback loops: review will help identify systematic errors and refine AI model performance.

● Task prioritization: the AI is anticipated to adapt over time, prioritizing high-value checklist items (e.g., follow-up visit attendance) to improve clinical relevance.

How will we measure success? Once implemented, the tool’s effectiveness will be assessed by comparing pre- and post-implementation data, focusing on:

● Clarity and accuracy of checklist documentation via manual review

● Improvements in checklist completion rates compared with a baseline risk model

● Impact on readmission rate compared with a baseline risk model
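
For the comparison against a baseline risk model, one common framing is an observed-to-expected (O/E) ratio: the number of observed 30-day readmissions divided by the sum of each patient's predicted readmission risk. The sketch below assumes per-patient predicted risks are available from some baseline model, which the proposal does not specify; the function name and the numbers are illustrative, not results.

```python
from typing import Sequence


def observed_to_expected(readmitted: Sequence[bool], predicted_risk: Sequence[float]) -> float:
    """Ratio of observed readmissions to the number expected under a baseline model."""
    if len(readmitted) != len(predicted_risk):
        raise ValueError("readmitted and predicted_risk must have the same length")
    expected = sum(predicted_risk)   # expected number of readmissions
    if expected <= 0:
        raise ValueError("expected readmissions must be positive")
    observed = sum(readmitted)       # True counts as 1, False as 0
    return observed / expected


# Illustrative cohort of five patients (made-up values)
ratio = observed_to_expected(
    readmitted=[True, False, False, False, False],
    predicted_risk=[0.3, 0.2, 0.1, 0.2, 0.2],
)
```

A ratio below 1 would mean fewer readmissions than the baseline model predicted. A pre/post comparison of this ratio would still need confidence intervals and attention to changes in case mix.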

Qualifications and commitment: Seth Blumberg and Prashant Patel are attending hospitalists. Seth runs a research group focused on computational modeling in medicine, including modeling the clinical progression of hospitalized patients using EHR data. Prashant is a physician-educator with experience improving provider experience and interprofessional team collaboration to improve the efficiency of patient discharges.

Comments

Really interesting use of AI which keeps a human decision maker in the loop and whose output could improve interdisciplinary communication, patient / family understanding, possibly clinical outcomes. Is there any chance of integrating this into the proposals for DC summaries (Rosner/Raghu) +/- DC Instructions (Ghandi/Muniyappa) for synergy?

Thanks David! Yes - I think this project could integrate well with the DC summary and DC instructions proposals. In fact, we have had the chance to chat with Drs. Bhatia, Gandhi, Muniappa, Rosner and others, who have all provided very useful feedback. I really like the open format of this ML demo form as it has stimulated great collaborative potential in that regard.

The specific way that I think the post-discharge checklist differs from DC summary and/or DC instructions generators is that it provides a structured way both to help patients understand what actions are recommended for them and to keep track of their progress/hurdles in accomplishing the prescribed tasks.

Can you clarify the current process CTOP uses to track and help facilitate completion of follow-up tasks? How would this tool work into their existing workflow? And what if it's a patient that CTOP would not usually follow?

Thanks for the question Mark. There are a few ways that the checklist can facilitate CTOP's impactful work. First, it would provide a structured format for items that require follow-up, thus helping with efficiency and also allowing conversations / nudges with patients to be more specific. Second, completion of some tasks (e.g. scheduling of follow-up visits, prescription pick up, and discharge lab tests) can be automatically tracked. A summary dashboard can then help discharge transition teams prioritize whom to contact. Third, the same dashboard provides process data that can be used to quantitatively probe whether completion of certain tasks is more/less associated with better/worse outcomes. This would help guide future studies for improving discharge transitions.

The hope is that this checklist (combined with automated tracking of task completion) would improve CTOP's efficiency, allowing them to follow more patients. For patients who are not followed by CTOP, the discharge checklist would still be accessible by other discharge providers, including SNF teams and PCPs.

Hi Mark, great question!

I'm the lead outreach nurse for CTOP so I can answer any additional questions about our current process. Once a patient responds to CTOP’s automated outreach (via text or call) and indicates a post-discharge question or concern, the RN or pharmacist performs a focused chart review—specifically looking into the transitions domain the patient flagged (e.g., symptoms, medications, discharge instructions, follow-up plan).

Sometimes, follow-up tasks are clearly outlined in the discharge summary; other times, they may be buried in a consult or procedure note. When clear instructions are present, our nurses can assess and reinforce the plan—for example, asking, “Did you hear from the home health agency?” or “Did you have your labs drawn yesterday?”

For patients who do not respond to the automated outreach but meet certain criteria (e.g., age >85, primary language other than English), we place a manual call to assess for any care transition issues and to reinforce follow-up plans or tasks.

Our team documents each interaction using a SmartPhrase template in a “Pt Outreach” encounter and escalates any issues using service-specific triage algorithms.

More information on how our program operates is available in this article: https://pubmed.ncbi.nlm.nih.gov/34627715/ . I’m happy to answer any additional questions!

I can imagine there might be a lot of value in having the AI try to clarify ambiguous plans. But how would the tool work with the back-and-forth required to clarify? Would the tool flag ambiguous plans and alert the clinician, who tries to fix them and then reruns the tool? Seems like it could require a lot of complex programming to get that interaction ironed out?

Thanks Mark for noticing our hope of using AI to clarify ambiguity in the EHR. The concept here is that it would act a bit like a spell-checker. Information that is not consistent would be highlighted. Hovering over the highlight would provide specific information about where the ambiguity lies. Providers could then choose to fix those ambiguities, verify which of multiple options is 'correct', or ignore them.

This is a secondary feature though. The initial objective is simply to create the post-discharge list.

I like your idea to help promote streamlining the discharge process. This is one area full of gaps that can be closed.

Excited to learn more about this project