The UCSF Health Problem

Acute otitis media (AOM) affects millions of children each year and is the number one indication for antibiotic use in pediatrics,1 despite evidence that 85% of cases self-resolve.2 To combat antibiotic overuse and the potential for adverse events, the American Academy of Pediatrics3 and Centers for Disease Control and Prevention4 recommend Safety Net Antibiotic Prescriptions (SNAPs). SNAPs are prescribed during the encounter with the intention that the prescription will be filled and used within 1-3 days only if the child’s symptoms worsen or fail to resolve. However, SNAPs are difficult to track because no structured designation in the order data distinguishes them from standard prescriptions meant to start immediately (treatment today prescriptions, TTP). Thus, identifying SNAPs has historically required burdensome manual chart review, which makes it difficult to assess their epidemiological value.5

SNAPs are commonly used in pediatric care at UCSF, both in ambulatory and emergency settings, yet their use is not systematically tracked. Quality improvement initiatives require insight into how often SNAPs are prescribed and filled, which patient populations are more likely to receive or use them, and how prescribing patterns vary across clinicians in order to ensure equitable and effective antibiotic stewardship.

How Might AI Help?

Versa has already shown that it can help analyze disparities in pediatric antibiotic prescribing by automating labor-intensive chart review. In our pilot retrospective cohort study of pediatric AOM cases from 2021 to 2024, we found that Versa (utilizing GPT-4o) accurately categorized 98% of treatment plans as “SNAP”, “TTP”, or “Other” from physician notes, compared with the gold standard of human review by two board-certified pediatricians.6 The model achieved a sensitivity of 95.9% and specificity of 99.1% for SNAP detection. Overall, 76.8% of antibiotic prescriptions were TTP and 23.2% were SNAPs, and non-English-speaking patients and those in the lowest SES quartiles were significantly less likely to receive SNAPs. These results indicate substantial opportunity to expand the use of SNAPs.

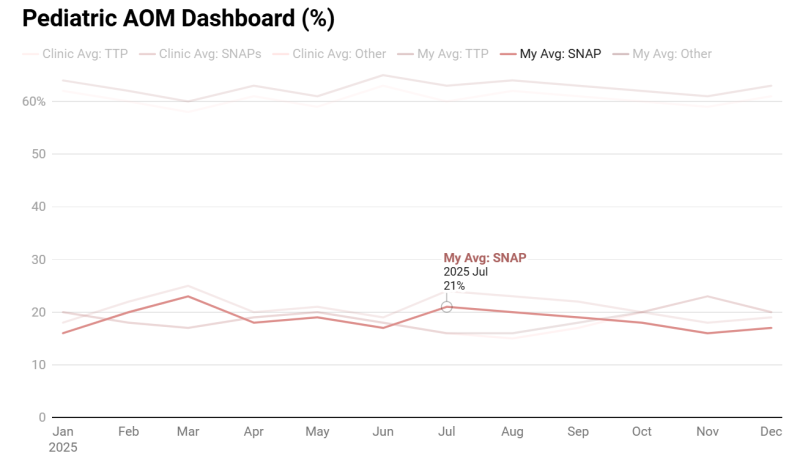
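As a minimal sketch of how sensitivity and specificity for SNAP detection can be computed against reviewer labels, the Python below treats “SNAP” as the positive class and “TTP” and “Other” together as the negative class. The one-vs-rest framing, the function name, and the toy labels are assumptions for illustration; they are not the study code or study data.

```python
def sensitivity_specificity(gold, predicted, positive="SNAP"):
    """One-vs-rest sensitivity and specificity for the `positive` label.

    gold      -- labels from human review (the reference standard)
    predicted -- labels from the model, in the same order
    """
    tp = fn = fp = tn = 0
    for g, p in zip(gold, predicted):
        if g == positive and p == positive:
            tp += 1  # true SNAP correctly detected
        elif g == positive:
            fn += 1  # true SNAP missed by the model
        elif p == positive:
            fp += 1  # non-SNAP incorrectly labeled as SNAP
        else:
            tn += 1  # non-SNAP correctly left as non-SNAP
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Toy labels for illustration only; these are not study data.
gold = ["SNAP", "SNAP", "TTP", "Other", "TTP"]
predicted = ["SNAP", "TTP", "TTP", "Other", "SNAP"]
sens, spec = sensitivity_specificity(gold, predicted)
```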

Building on this foundation, we propose the development of a live Versa/Epic-integrated dashboard that enables physicians to visualize their own prescribing patterns in real time. Versa will automatically review provider notes for all pediatric encounters (patients < 19 years old) that are flagged with an AOM ICD-10 diagnosis code (H65, H66, or H67).7,8 Each encounter will be classified as SNAP, TTP, or Other, and the results will populate the dashboard.
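The encounter selection described above can be sketched as a simple eligibility filter. This is an illustrative Python sketch only: the `Encounter` record and its field names are hypothetical and do not reflect the APeX or Clarity schema, and matching on the three-character code prefix is an assumption about how the H65, H66, and H67 categories would be applied.

```python
from dataclasses import dataclass

# ICD-10 category prefixes named in the proposal for AOM encounters.
AOM_CODE_PREFIXES = ("H65", "H66", "H67")
MAX_AGE_EXCLUSIVE = 19  # "patients < 19 years old"


@dataclass
class Encounter:
    encounter_id: str       # hypothetical identifier
    age_years: float        # patient age at the time of the encounter
    icd10_codes: list[str]  # diagnosis codes attached to the encounter


def is_eligible(enc: Encounter) -> bool:
    """Return True if the encounter is pediatric and carries an AOM code."""
    if enc.age_years >= MAX_AGE_EXCLUSIVE:
        return False
    # str.startswith accepts a tuple, so any of the three prefixes matches.
    return any(code.upper().startswith(AOM_CODE_PREFIXES) for code in enc.icd10_codes)
```

Only encounters passing this filter would be sent to the model for SNAP/TTP/Other classification.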

The primary visualization will display an individual provider’s SNAP versus TTP prescribing rates, benchmarked against their clinical department and the broader institution. Users will be able to toggle between percentage-based and absolute patient counts and view trends over time. Additional features will include filtering by English vs. non-English-speaking patients, race, ethnicity, and additional demographics. Based on their chart selections, users will be able to click to generate a workbench report that shows the individual encounters and the classifications that make up the data points they are viewing.

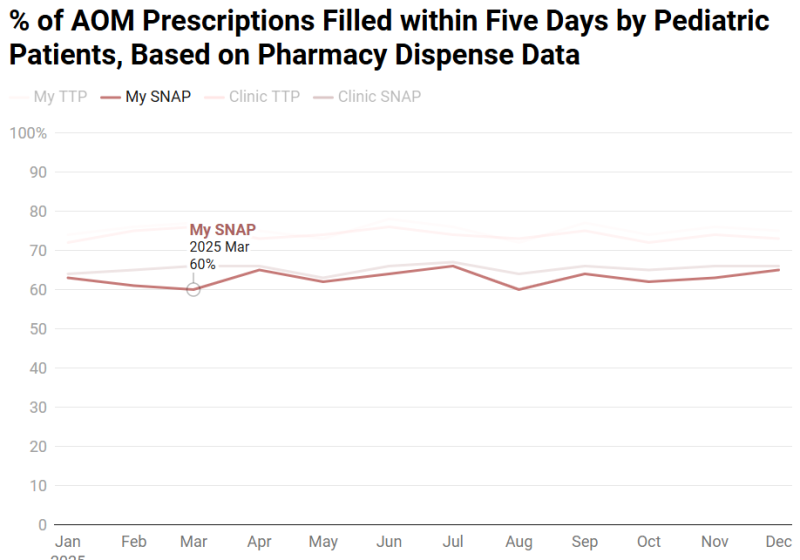
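To illustrate the benchmarking logic behind the primary visualization, the sketch below computes SNAP counts and rates for one provider, their department, and the institution. The record layout `(provider_id, department, label)` and the function names are hypothetical, and excluding “Other” encounters from the rate denominator is our assumption for this example rather than a finalized design decision.

```python
from collections import Counter


def snap_summary(labels):
    """Absolute counts plus SNAP share of antibiotic prescriptions (SNAP + TTP)."""
    counts = Counter(labels)
    prescriptions = counts["SNAP"] + counts["TTP"]  # assumption: "Other" is excluded
    rate = counts["SNAP"] / prescriptions if prescriptions else None
    return {"snap": counts["SNAP"], "ttp": counts["TTP"], "snap_rate": rate}


def benchmark(records, provider_id, department):
    """Summaries for a provider within a department, the department, and the institution.

    records -- iterable of (provider_id, department, label) tuples
    """
    records = list(records)  # allow multiple passes over the data
    return {
        "provider": snap_summary(
            lab for pid, dept, lab in records if pid == provider_id and dept == department
        ),
        "department": snap_summary(lab for _, dept, lab in records if dept == department),
        "institution": snap_summary(lab for _, _, lab in records),
    }
```

Returning both counts and rates from the same summary supports the toggle between absolute patient counts and percentages.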

We have also linked pharmacy dispense data to patient encounters, enabling us to track whether and when prescriptions were filled. An additional dashboard view, should resources allow, could display individual provider SNAP and TTP pickup rates compared to clinic- and institution-level benchmarks, offering insight into the effectiveness of individual providers’ patient counseling and education. This view would allow providers to assess whether their patients are filling prescriptions as intended, particularly in the case of SNAPs, which are education-intensive, and to refine communication strategies accordingly.
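A pickup-rate calculation over the linked dispense data might look like the following sketch. The tuple layout `(label, prescribed_date, dispensed_date_or_None)` is hypothetical and stands in for whatever the linked encounter and dispense tables actually provide.

```python
from datetime import date
from typing import Optional


def days_to_fill(prescribed: date, dispensed: Optional[date]) -> Optional[int]:
    """Days between prescribing and dispensing, or None if never filled."""
    return None if dispensed is None else (dispensed - prescribed).days


def pickup_rate(prescriptions, label):
    """Share of prescriptions with the given label that were dispensed.

    prescriptions -- list of (label, prescribed_date, dispensed_date_or_None)
    Returns None when there are no prescriptions with that label.
    """
    matching = [rx for rx in prescriptions if rx[0] == label]
    if not matching:
        return None
    filled = sum(1 for _, _, dispensed in matching if dispensed is not None)
    return filled / len(matching)
```

Reporting days to fill alongside the rate would show whether SNAPs are being filled after a waiting period, as intended, or on the day of the visit.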

Finally, should Versa prove to be too expensive for this use case, our team also trained a small, local model, Clinical LongFormer, that demonstrated 93% accuracy. This model could be deployed on Wynton (UCSF’s high-performance compute cluster) in place of Versa should a more cost-efficient solution be desired.

While we are actively exploring the development of a structured SmartSet in APeX to distinguish SNAP from TTP prescriptions, implementing it and achieving consistent adoption across pediatrics, family medicine, and emergency medicine at multiple UCSF sites pose significant operational challenges. Our proposed AI-based approach offers a scalable, low-burden solution that enables both retrospective analysis for quality improvement and equity monitoring, and future validation of SmartSet use against what the AI determines from clinical documentation.

How Would an End-User Find and Use It?

The dashboard will be integrated directly into UCSF’s APeX EHR system for the relevant pediatric departments that consent to its use. Users will see it on a tab adjacent to their inbox for quick and easy access between seeing patients. The default view will present each user’s prescribing patterns in the context of the clinical department in which they are currently logged in. The underlying LLM will run every seven days on all pediatric encounters associated with an AOM ICD-10 code in enabled clinical settings, and the dashboard will automatically update with new data every week. Users will be able to toggle on relevant patient demographics such as language and insurance. They may also choose between absolute patient numbers and percentages when viewing how their AOM treatment plan patterns compare to others and how often their patients are filling their prescriptions.

Embed a Picture of the AI Tool

Risks of AI Errors

There are risks such as false positives (incorrectly labeling a non-SNAP as a SNAP) and false negatives (failing to identify a SNAP). False positives could promote a sense of successful antibiotic stewardship when there is in fact a gap. Conversely, false negatives may result in missed opportunities to identify disparities and support quality improvement. While the model is not directly involved in treatment decisions, misclassification could impact provider benchmarking and the efficacy of equity-focused interventions.

To mitigate these risks, ongoing evaluation will be implemented to monitor for model drift, ensuring the AI tool maintains accuracy over time. This includes continuous performance monitoring and validation procedures to detect and address any degradation in model accuracy. A feedback mechanism will be established for clinicians to report discrepancies through the workbench reporting interface that shows individual encounters and their Versa classifications. This feedback will be reviewed and used to refine the AI model.
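One simple way to operationalize this monitoring is to track weekly agreement between Versa’s labels and clinician-reviewed labels and flag weeks that fall below a chosen threshold. The sketch below is illustrative only: the review tuple layout, the function names, and the 0.95 default threshold are assumptions, not validated parameters.

```python
from collections import defaultdict


def weekly_agreement(reviews):
    """Fraction of reviewed encounters per week where model and reviewer agree.

    reviews -- iterable of (week, model_label, reviewer_label) tuples
    """
    tallies = defaultdict(lambda: [0, 0])  # week -> [agreements, total reviewed]
    for week, model_label, reviewer_label in reviews:
        tallies[week][0] += model_label == reviewer_label  # True counts as 1
        tallies[week][1] += 1
    return {week: agree / total for week, (agree, total) in tallies.items()}


def weeks_below_threshold(agreement_by_week, threshold=0.95):
    """Weeks whose agreement falls below the (assumed) alert threshold."""
    return sorted(week for week, rate in agreement_by_week.items() if rate < threshold)
```

Flagged weeks would prompt a targeted manual review of the underlying encounters before any change to the prompt or model.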

How Will We Measure Success?

Success will be measured through provider adoption and any observed impact on SNAP prescribing and dispense behavior.

Measures using data already being collected in APeX:

- Overall clinical rates of TTP and SNAP being prescribed before AI tool implementation

- Rate of TTP vs. SNAP use by provider

- Patient pickup rates of SNAP vs. TTP by demographic

Additional measures that would ideally be collected:

- Provider engagement metrics, including frequency and duration of dashboard use

- Equity metrics, i.e., changes in SNAP prescribing rates among non-English-speaking and low-SES patients

- Change in SNAP prescribing rates among dashboard-exposed vs. non-exposed providers (with exposure defined from APeX audit logs as a clinician opening the dashboard more than once in the set time period)

- Provider satisfaction with the AI tool through Qualtrics surveys

Describe Your Qualifications and Commitment

Jessica Pourian, MD, is a Clinical Informatics Fellow and urgent-care pediatrician with experience in LLMs, operational AI implementations, antibiotic stewardship, and healthcare disparities. She is Physician Builder and Clarity certified. She is transitioning to a faculty position as Assistant Professor in the Department of Pediatrics and will have a 40% health system operational role as Physician Lead for Pediatric Informatics. She has led the SNAP Study, which uses AI to identify and address inequities in antibiotic prescribing for pediatric AOM. She is committed to the success of this project and will dedicate protected time to participate in regular work-in-progress sessions, collaborate closely with the Health AI and AER teams, and support the development, validation, and implementation of the AI algorithm in clinical workflows.

Valerie Flaherman, MD, MPH, is a Professor of Pediatrics and Epidemiology & Biostatistics. She brings expertise in clinical research, health services, and informatics, with a particular focus on leveraging EHR data for clinical decision support (CDS). Dr. Flaherman led the development of the Newborn Weight Tool (NEWT), a widely used digital tool built from EHR data on over 160,000 infants, and served as PI for the Healthy Start trial integrating CDS into Epic. She is also the Managing Director of the BORN Network, a national research collaborative. Her background in EHR-based intervention design and pediatric care makes her a key advisor on the development and implementation of the SNAP prescribing dashboard.

Raman Khanna, MD, MAS, is a Professor of Clinical Medicine and Medical Director of Inpatient Informatics. He co-chairs the Digital Diagnostics and Therapeutics Committee and leads efforts to integrate digital tools into the EHR, with a focus on clinical communication, decision support, and API-based innovation. Dr. Khanna is also Program Director of the Clinical Informatics Fellowship. His experience in deploying operational informatics tools across UCSF Health makes him a key collaborator in the development and implementation of the SNAP dashboard.

References

1. Hersh AL, Shapiro DJ, Pavia AT, Shah SS. Antibiotic prescribing in ambulatory pediatrics in the United States. Pediatrics. 2011;128(6):1053-1061. doi:10.1542/peds.2011-1337

2. Venekamp RP, Sanders SL, Glasziou PP, Del Mar CB, Rovers MM. Antibiotics for acute otitis media in children. Cochrane Database Syst Rev. 2015;2015(6):CD000219. doi:10.1002/14651858.CD000219.pub4

3. The Diagnosis and Management of Acute Otitis Media | Pediatrics | American Academy of Pediatrics. Accessed August 13, 2024. https://publications.aap.org/pediatrics/article/131/3/e964/30912/The-Dia...

4. CDC. Ear Infection Basics. Ear Infection. April 23, 2024. Accessed August 13, 2024. https://www.cdc.gov/ear-infection/about/index.html

5. Daggett A, Wyly DR, Stewart T, et al. Improving Emergency Department Use of Safety-Net Antibiotic Prescriptions for Acute Otitis Media. Pediatr Emerg Care. 2022;38(3):e1151-e1158. doi:10.1097/PEC.0000000000002525

6. Flaherman V, Pourian J. A SNAPpy Use of Large Language Models: Using LLMs to Classify Treatment Plans in Pediatric Acute Otitis Media. Under Review.

7. Vojtek I, Nordgren M, Hoet B. Impact of pneumococcal conjugate vaccines on otitis media: A review of measurement and interpretation challenges. International Journal of Pediatric Otorhinolaryngology. 2017;100:174-182. doi:10.1016/j.ijporl.2017.07.009

8. Hu T, Done N, Petigara T, et al. Incidence of acute otitis media in children in the United States before and after the introduction of 7- and 13-valent pneumococcal conjugate vaccines during 1998-2018. BMC Infect Dis. 2022;22(1):294. doi:10.1186/s12879-022-07275-9

Comments

I believe the AI in this proposal is meant to assess whether an antibiotic prescription is SNAP vs. TTP (or other) using clinical notes. This simple categorization task could instead be accomplished by including a structured field, checkbox, BPA or some other way for the ordering clinician to indicate whether the prescription is SNAP or TTP. Can you compare the ease of implementation of this alternative non-AI approach to the same task, and pros and cons?

Hi Dr. Pletcher,Thank you for

The following is from Jessica

Hi Dr. Pletcher, Thank you
